This is the main module of the pyMagnetics package. Its purpose is to provide tools for retrieving, manipulating, and visualizing DIII-D magnetics data.

The module depends on almost all of the other modules in the package, and thus also on the data module of Tom Osborn’s pyD3D package.

When automating array manipulation, please keep the following notes in mind:

The most basic element of this module is the Sensor object. These objects have data retrieval, geometric visualization, and data visualization methods built into them. However, it is often burdensome to handle many Sensors in parallel. The Array objects consist of multiple Sensors, neatly organized, with the Sensor methods mentioned above streamlined for your convenience.

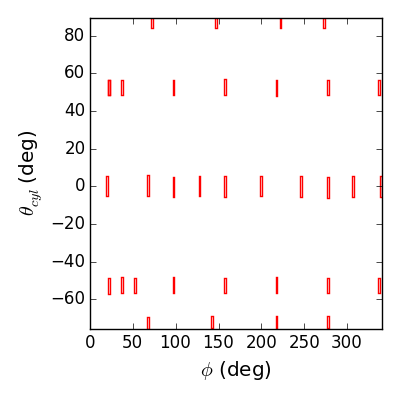

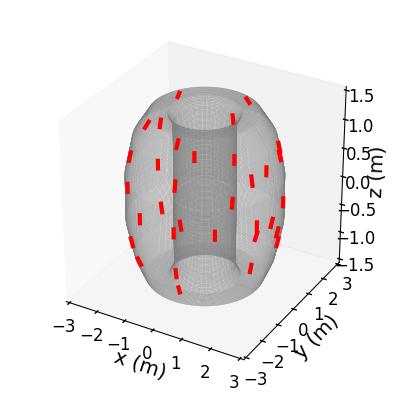

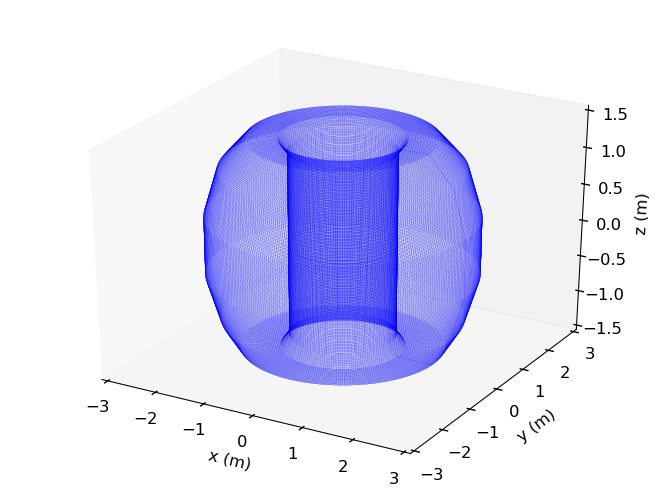

Let’s look at a large array, consisting of all the MPID probes on the low field side:

>>> lfsbp = differenced_arrays['LFS MPIDs']

>>> f2d = lfsbp.plotprobes(dim=2,fill=False,color='r',legend=False)

>>> f2d.savefig(__packagedir__+'/doc/examples/magnetics_2darray.png')

>>> f3d = lfsbp.plotprobes(dim=3,color='red')

>>> f3d = d3dgeometry.vessel.plot3d(wireframe=True,linewidth=0.1,color='grey',figure=f3d)

>>> f3d.savefig(__packagedir__+'/doc/examples/magnetics_3darray.png')

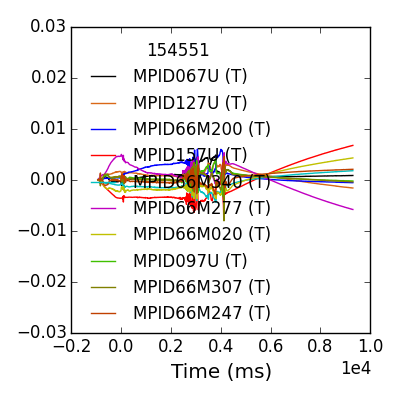

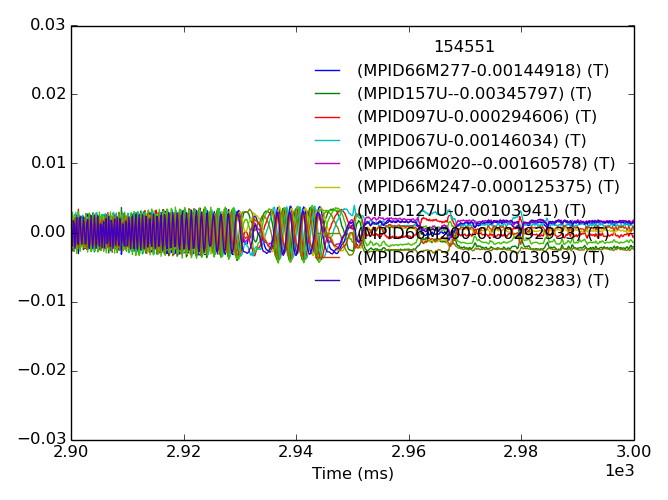

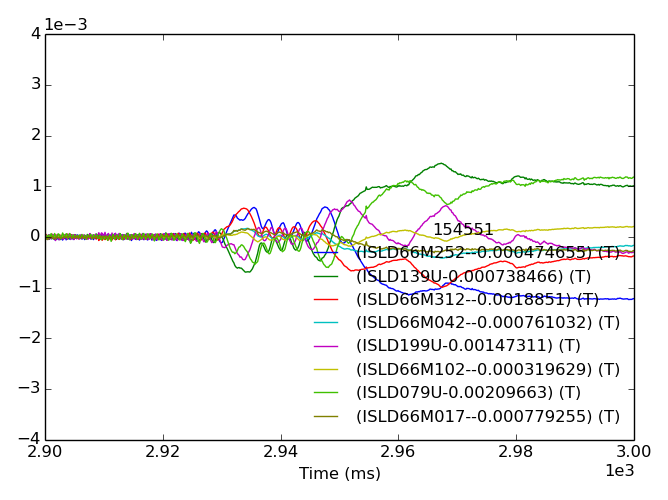

Now let’s limit ourselves to a typical 1D array and look at the raw data.

>>> mpidm = differenced_arrays['MPID66M']

>>> success = mpidm.set_data(154551)

Calling set_data for sensors in MPID66M array.

>>> fdata = mpidm.plotdata()

>>> fdata.savefig(__packagedir__+'/doc/examples/magnetics_data.png')

For large arrays, displaying all the data can be ugly and slow. Try out the search keyword (search=‘200’ for example) on your own.

One thing we immediately see is that each sensor has a unique evolution early in time, which often dominates the signal amplitude. This is due to pickup of the toroidal field and the forming poloidal field during the startup and Ip ramp. We do not trust this as a true 3D signal. A common way of removing this pickup from studies of later times is to subtract a baseline taken just before the time of interest.
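The baseline idea can be sketched with plain numpy. This is only an illustration under stated assumptions, not the package's implementation: the function name `remove_baseline` mirrors the method used in this tutorial, but the signature, the synthetic signal, and the guess that `slope=True` means subtracting a straight-line fit are all assumptions.

```python
import numpy as np

def remove_baseline(t, y, t0, t1, slope=False):
    """Subtract a baseline estimated from the window t0 <= t <= t1 (illustrative)."""
    window = (t >= t0) & (t <= t1)
    if slope:
        # Assumed behaviour: fit a straight line in the window, subtract it everywhere.
        coeffs = np.polyfit(t[window], y[window], 1)
        return y - np.polyval(coeffs, t)
    # Uniform baseline: subtract the average of the signal over the window.
    return y - y[window].mean()

# Synthetic signal: constant pickup offset plus a perturbation starting after 2930 ms.
t = np.linspace(2800.0, 3000.0, 2001)
signal = 5.0 + np.where(t > 2930.0, np.sin(2 * np.pi * (t - 2930.0) / 20.0), 0.0)
clean = remove_baseline(t, signal, 2900.0, 2930.0)
```

After the subtraction the signal is zero up to the end of the baseline window, so only the later perturbation remains.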

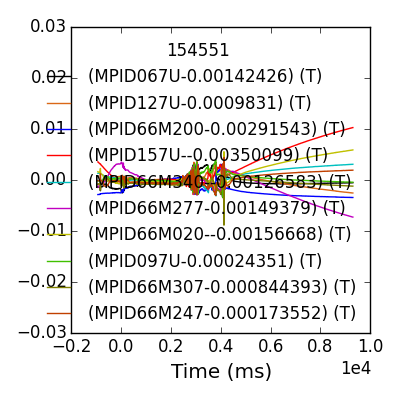

>>> mpidm = mpidm.remove_baseline(2900,2930,slope=False)

Calling remove_baseline for sensors in MPID66M array.

>>> fdata = mpidm.plotdata()

>>> fdata.savefig(__packagedir__+'/doc/examples/magnetics_data_baselined.png')

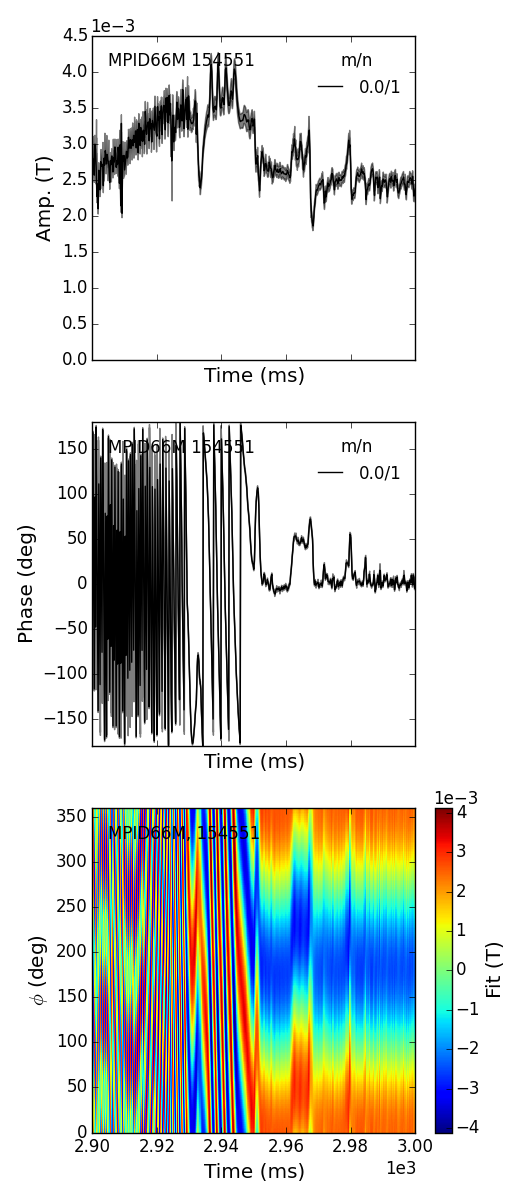

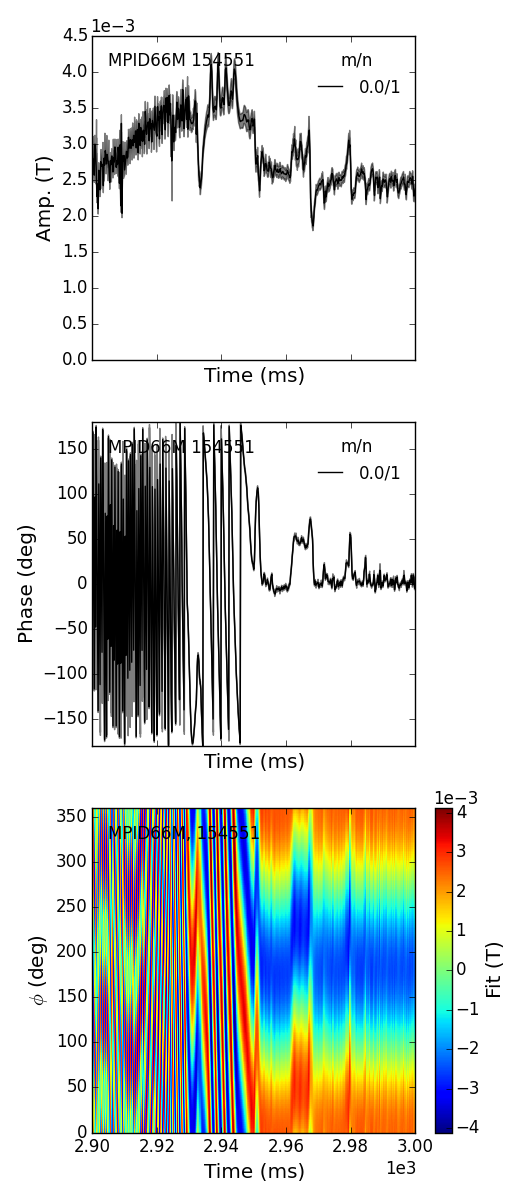

The array object comes with an associated ‘fit’ method, which in our 1D example reproduces simple toroidal sinusoid amplitude-phase fitting.

>>> fit = mpidm.fit(ns=[1],ms=[0],xlim=(2900,3000))

SVD found 2 coherent structures of interest

Fitting structure 1

Raw rank, condition number = 2, 1.42

Eff rank, condition number = 2, 1.42

Fitting structure 2

Raw rank, condition number = 2, 1.42

Eff rank, condition number = 2, 1.42
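For orientation, a toroidal amplitude-phase fit at a single time slice can be written as a two-column least-squares problem. The sketch below is an assumption-laden illustration rather than the package's fit method: the helper name `fit_toroidal_mode`, the probe angles, and the phase convention b = A cos(n phi - phase) are all hypothetical.

```python
import numpy as np

def fit_toroidal_mode(phi, b, n=1):
    """Least-squares fit of b(phi) = A*cos(n*phi - phase) at one time slice."""
    # Columns are the cosine and sine basis functions evaluated at the probes.
    basis = np.column_stack([np.cos(n * phi), np.sin(n * phi)])
    (c, s), *_ = np.linalg.lstsq(basis, b, rcond=None)
    # A*cos(n*phi - phase) = c*cos(n*phi) + s*sin(n*phi) with c = A*cos(phase), s = A*sin(phase).
    return np.hypot(c, s), np.arctan2(s, c)

# Hypothetical toroidal probe locations (degrees) and a noiseless n=1 signal.
phi = np.deg2rad([20.0, 67.0, 97.0, 127.0, 157.0, 200.0, 247.0, 277.0, 307.0, 340.0])
b_true = 3.0 * np.cos(phi - 0.5)
amplitude, phase = fit_toroidal_mode(phi, b_true)
```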

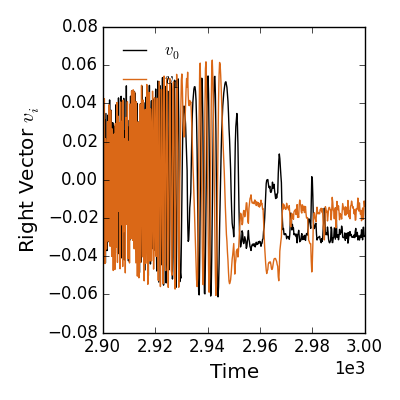

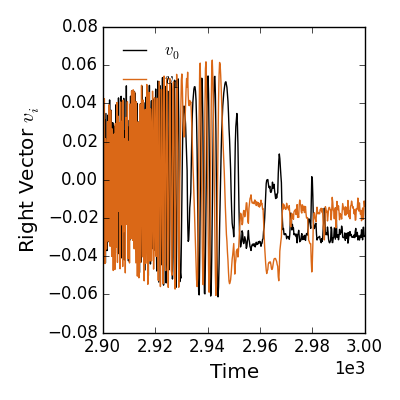

Notice that the fitting method performs an SVD on the data matrix (PxT, where P is the number of probes and T is the number of time points) and finds 2 coherent structures. The coherency metric includes eigenmodes progressively until the cumulative energy is above 98% of the total. In our case, the modes can be understood as akin to the sine and cosine components of the rotating n=1 mode. Each mode structure is individually fit to the spatial basis functions corresponding to the specified modes and geometry, and the fits are then combined in time using the right singular vectors of the data matrix.
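The energy cut-off can be illustrated with numpy alone. This is a rough sketch, assuming that "energy" means the squared singular values normalized to their sum; the package may define it differently. The function name `coherent_structures` and the synthetic rotating mode are hypothetical.

```python
import numpy as np

def coherent_structures(data, energy_fraction=0.98):
    """Truncate the SVD of a (probes x time) matrix at a cumulative energy threshold."""
    u, s, vt = np.linalg.svd(data, full_matrices=False)
    energy = s**2 / np.sum(s**2)          # assumed definition of mode energy
    cumulative = np.cumsum(energy)
    # Number of leading modes needed for the cumulative energy to exceed the threshold.
    k = int(np.searchsorted(cumulative, energy_fraction, side="right")) + 1
    k = min(k, len(s))
    return u[:, :k], s[:k], vt[:k]

# Synthetic rotating n=1 mode seen by 8 equally spaced probes, plus weak noise.
rng = np.random.default_rng(0)
phi = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
t = np.linspace(0.0, 100.0, 500)
data = np.cos(phi[:, None] - 2.0 * np.pi * 0.1 * t[None, :])
data += 0.01 * rng.standard_normal(data.shape)
u, s, vt = coherent_structures(data)
```

A rotating mode splits into a cosine-like and a sine-like spatial structure of nearly equal energy, which is why two structures survive the cut in this synthetic case.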

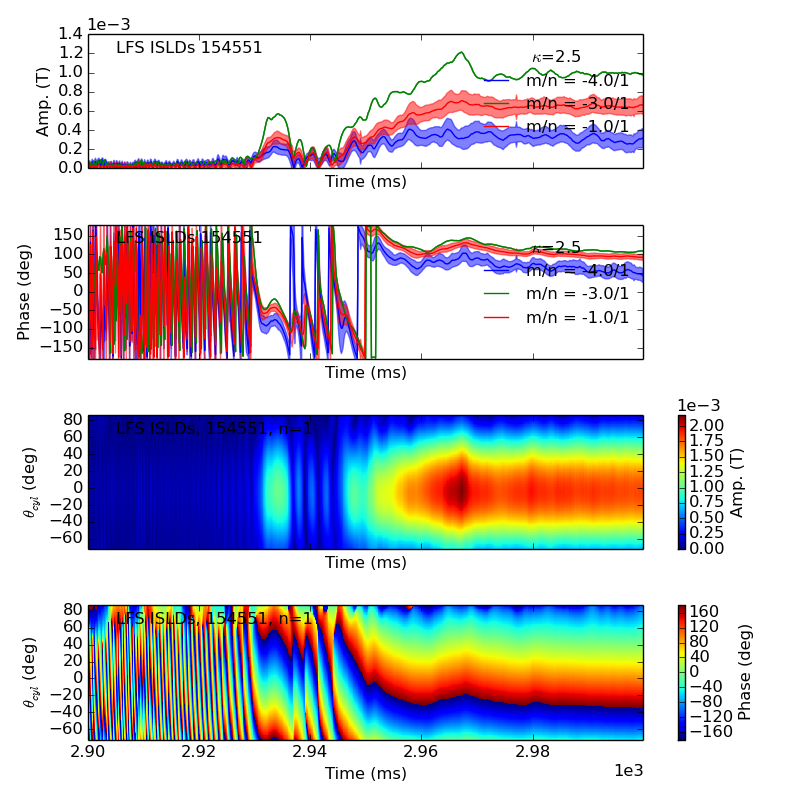

The energy and singular vectors can be viewed using built in methods.

>>> fsvde = fit.svd_data.plot_energy(cumulative=True)

>>> fsvde.savefig(__packagedir__+'/doc/examples/magnetics_svd_data_energy.png')

>>> fsvdt = fit.svd_data.plot_vectors(side='right')

>>> fsvdt.savefig(__packagedir__+'/doc/examples/magnetics_svd_data_time.png')

There is a lot of power in these quantities. There is physics understanding to be gained by isolating distinct coherent structures and their unique time behaviors. From a more practical standpoint, the energy cut-off reduces the incoherent noise fed into our final spatial fits. Ultimately, however, we want to see the final fit to our basis functions in space and time.

No problem!

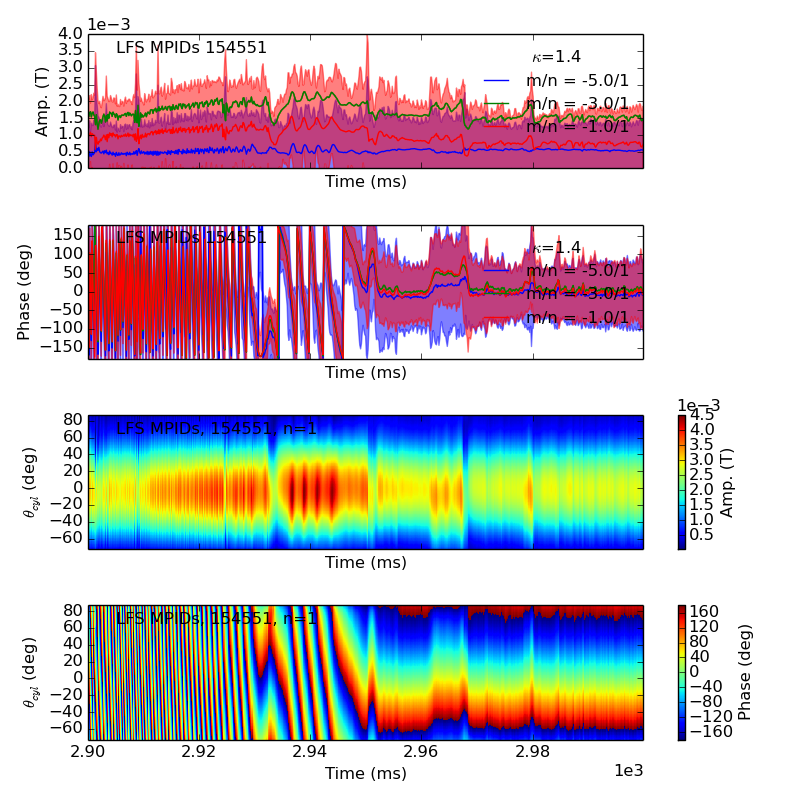

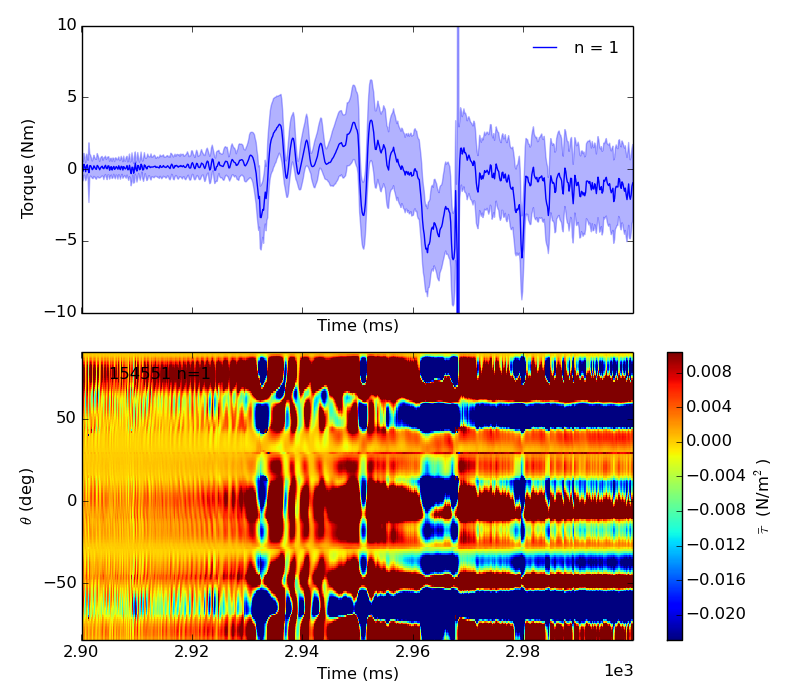

>>> fbasic = fit.plot()

Plotting fit for n = 1

>>> fbasic[0].savefig(__packagedir__+'/doc/examples/magnetics_1dfit.png')

Notice that the error in the amplitude and phase of the fit is shown graphically throughout time. The fit plotting function can return multiple plots, so let’s take a more complicated example to see why.

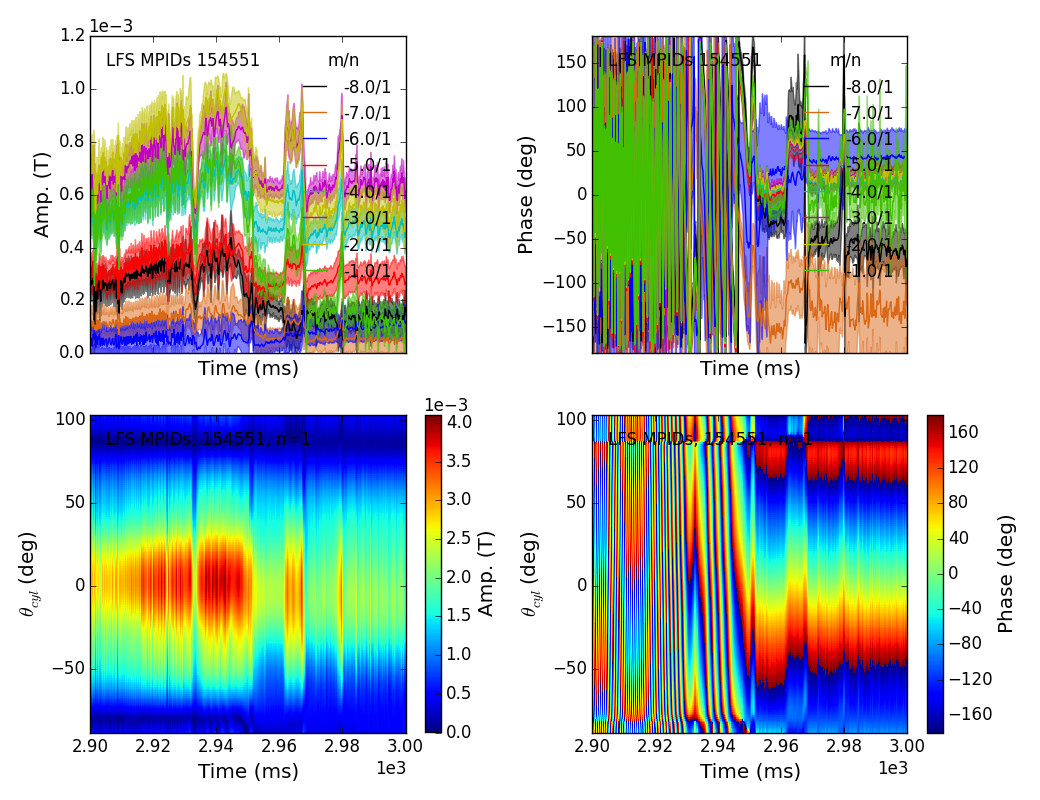

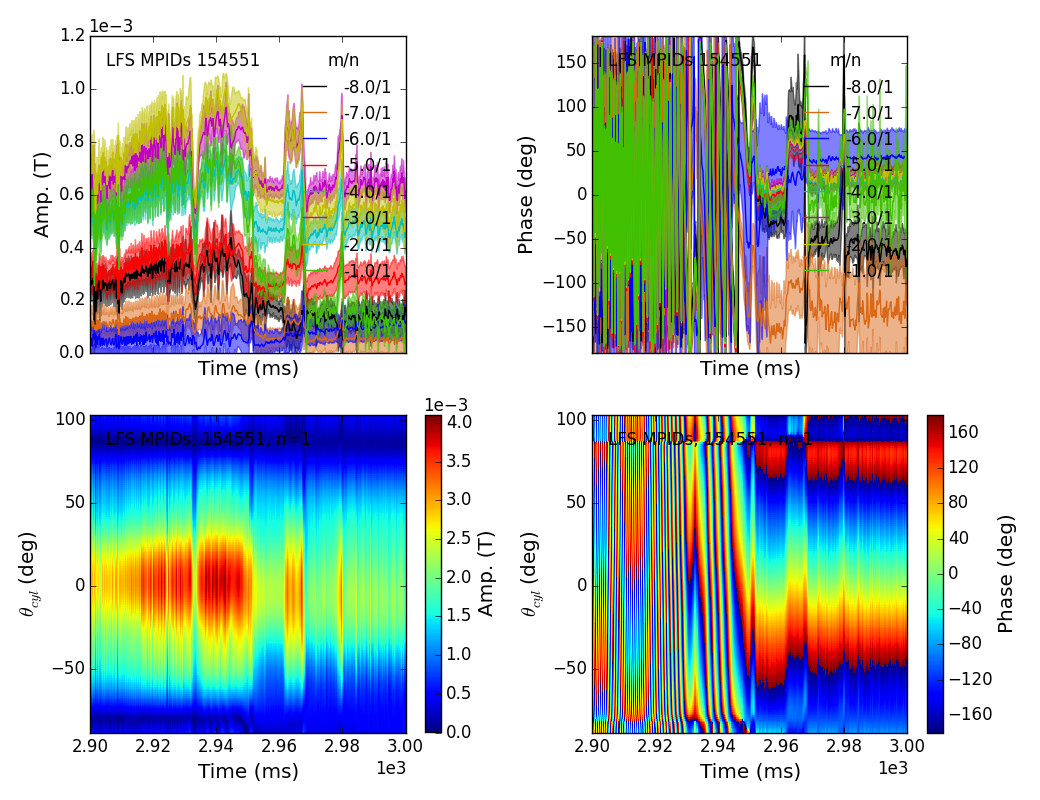

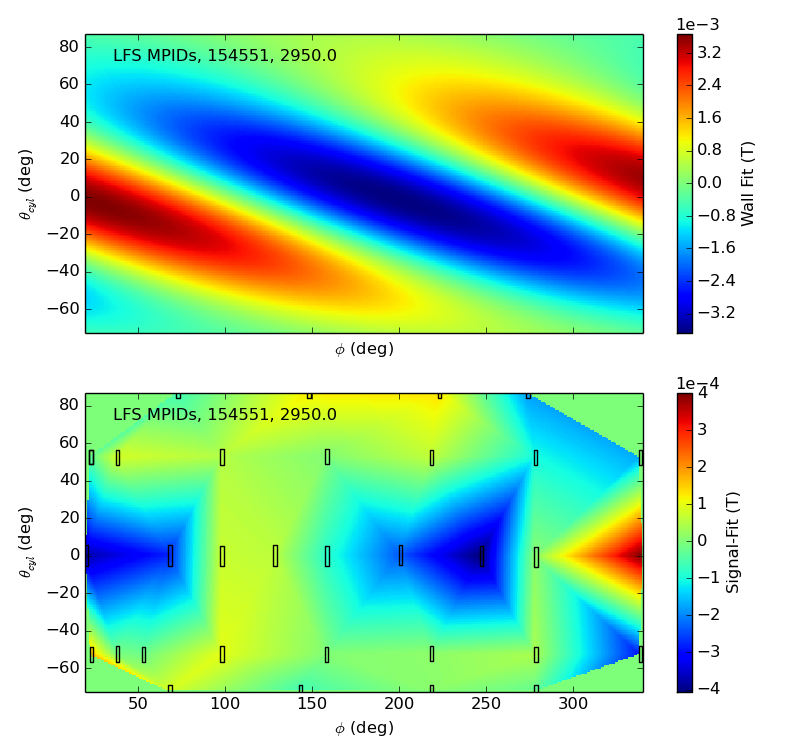

The magnetics module was made to handle 2D fits as naturally as in the 1D case.

>>> success = lfsbp.set_data(154551)

Calling set_data for sensors in LFS MPIDs array.

>>> lfsbp = lfsbp.remove_baseline(2900,2930)

Calling remove_baseline for sensors in LFS MPIDs array.

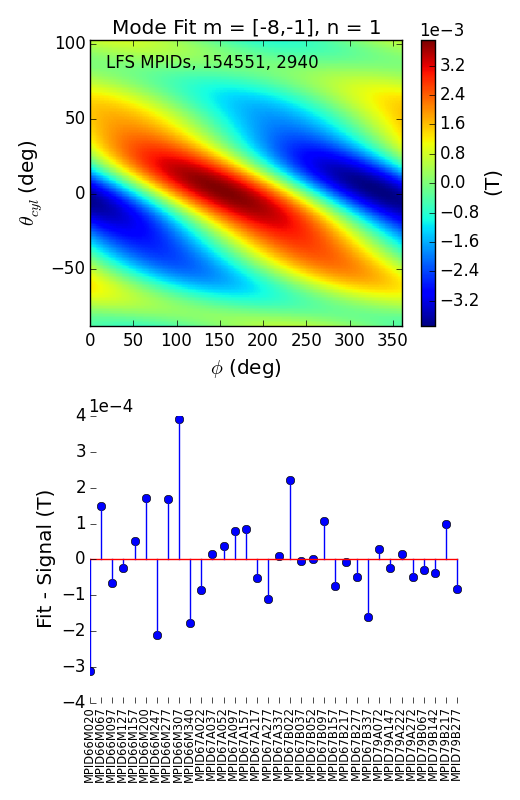

>>> fit = lfsbp.fit(ns=[1],ms=np.arange(-8,0),xlim=(2900,3000))

SVD found 4 coherent structures of interest

Fitting structure 1

Raw rank, condition number = 16, 7.65e+04

Eff rank, condition number = 10, 10

Fitting structure 2

Raw rank, condition number = 16, 7.65e+04

Eff rank, condition number = 10, 10

Fitting structure 3

Raw rank, condition number = 16, 7.65e+04

Eff rank, condition number = 10, 10

Fitting structure 4

Raw rank, condition number = 16, 7.65e+04

Eff rank, condition number = 10, 10

>>> f2d = fit.plot(dim=0)

Plotting fit for n = 1

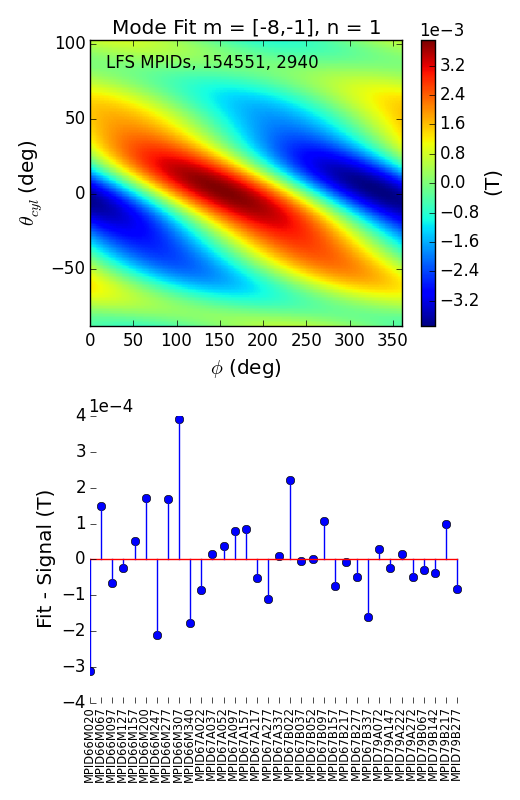

>>> f3d = fit.plot(dim=3,time=2940)

Scroll over axes to change time

>>> f2d[0].savefig(__packagedir__+'/doc/examples/magnetics_2dfit1.png')

>>> f3d[0].savefig(__packagedir__+'/doc/examples/magnetics_2dfit2.png')

That is it! These are the Mode Fits.

These plots are highly interactive. In your ipython session, move the mouse over one of the axes in the second figure and scroll to show the mode evolving in time (spinning, locking, etc.). Try plotting with dim=-2, and watching the amplitude evolve.

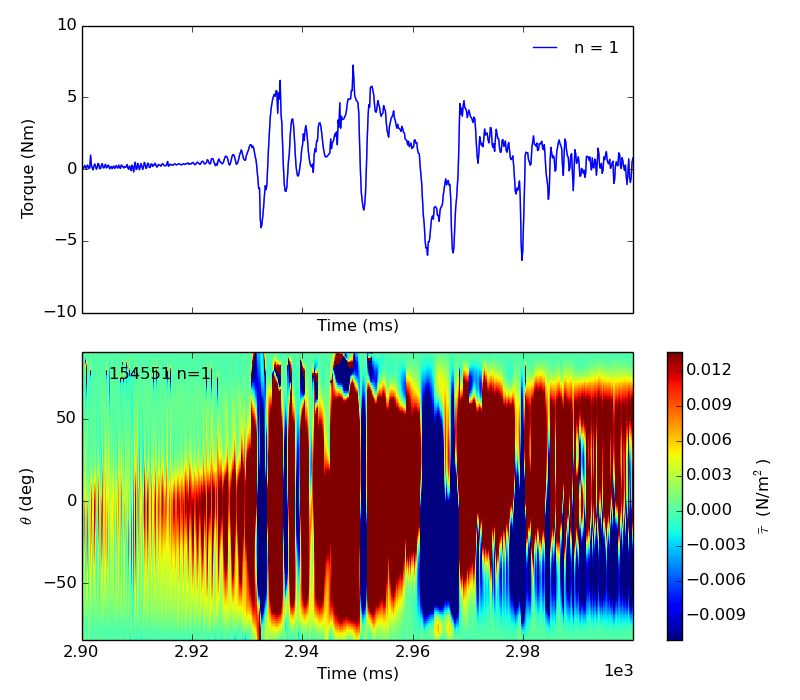

If you want the actual fit parameters, the (n,m) mode numbers and the complex amplitude for each pair can be accessed using the nms and anm attributes. The b_n method gives the amplitude of a single toroidal mode number as a function of the poloidal variable, and interp2d gives the total fit on the 2D space.

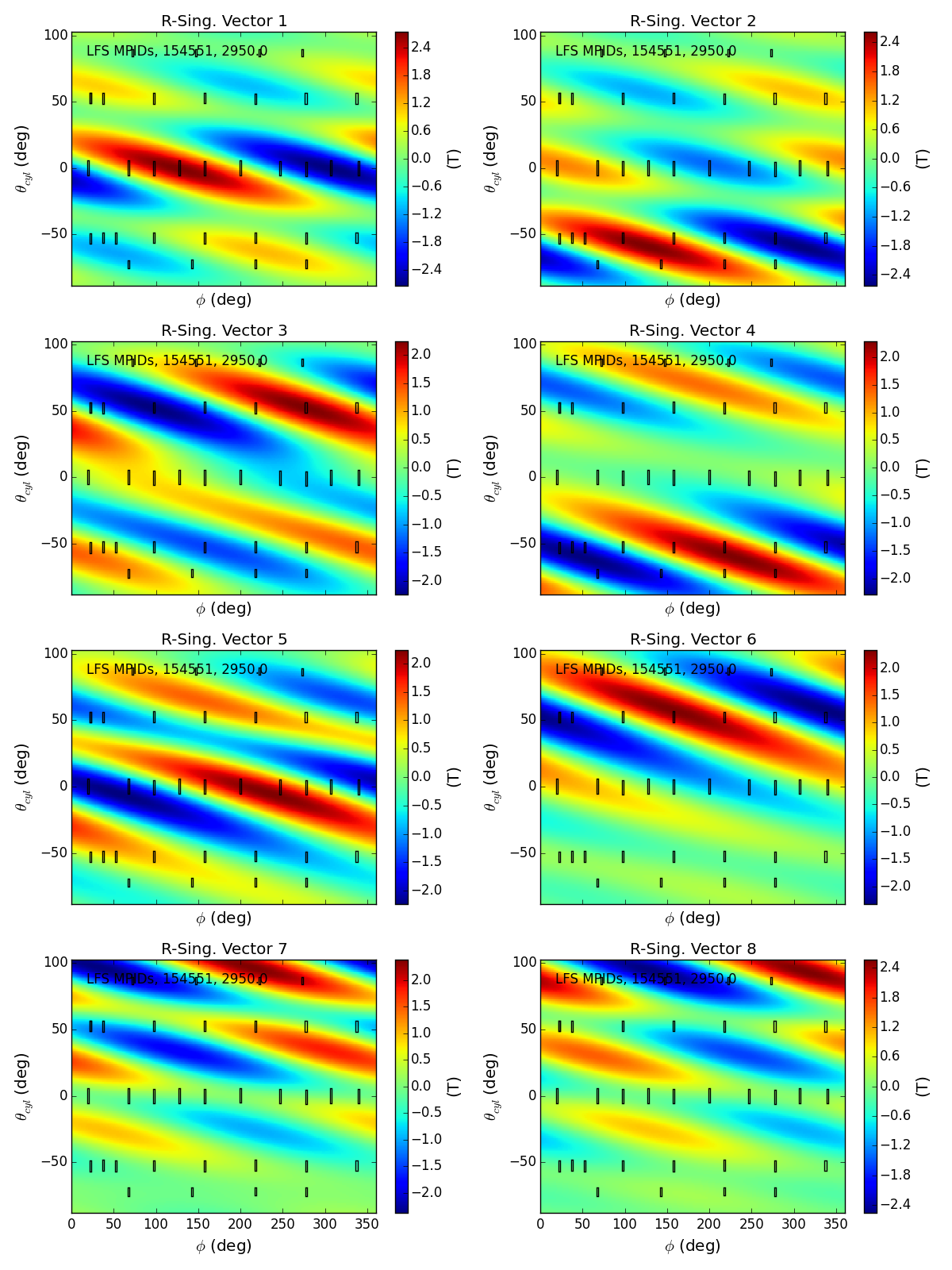

Finally, you may be wondering what the rank and condition numbers printed for each structure are. These are the rank and condition number of the basis function matrix A used to fit the mode amplitudes x by solving Ax = b, where b is the array of sensor signals for the structure. A second and completely independent SVD is done on the basis matrix A, and the singular values that fall below the cutoff set by a maximum condition number (a keyword argument of the fit) are removed. To see the singular values and the right and left singular vectors of this SVD, use the methods of the fit.svd_basis instance. To visualize the eigenmodes of this basis matrix in real space, use the fitvector keyword in the usual plot method.
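A truncated-SVD solve of Ax = b with a condition-number cutoff might look like the following. It is a generic sketch of the technique, not the code behind the fit method: the function name, the `cond_max` keyword, and the test matrix are assumptions made for illustration.

```python
import numpy as np

def truncated_svd_solve(A, b, cond_max=10.0):
    """Solve A x = b, discarding singular values smaller than s_max / cond_max."""
    u, s, vh = np.linalg.svd(A, full_matrices=False)
    keep = s >= s[0] / cond_max
    # Pseudo-inverse built from the retained singular triplets only.
    x = vh[keep].conj().T @ ((u[:, keep].conj().T @ b) / s[keep])
    report = {
        "raw": (int(np.linalg.matrix_rank(A)), s[0] / s[-1]),
        "eff": (int(keep.sum()), s[0] / s[keep][-1]),
    }
    return x, report

# A deliberately ill-conditioned basis matrix: the last direction is barely constrained.
A = np.diag([10.0, 5.0, 1.0, 0.01])
b = A @ np.ones(4)
x, report = truncated_svd_solve(A, b, cond_max=100.0)
```

Here the weakly constrained fourth component is dropped, so its amplitude is returned as zero instead of being fit to noise.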

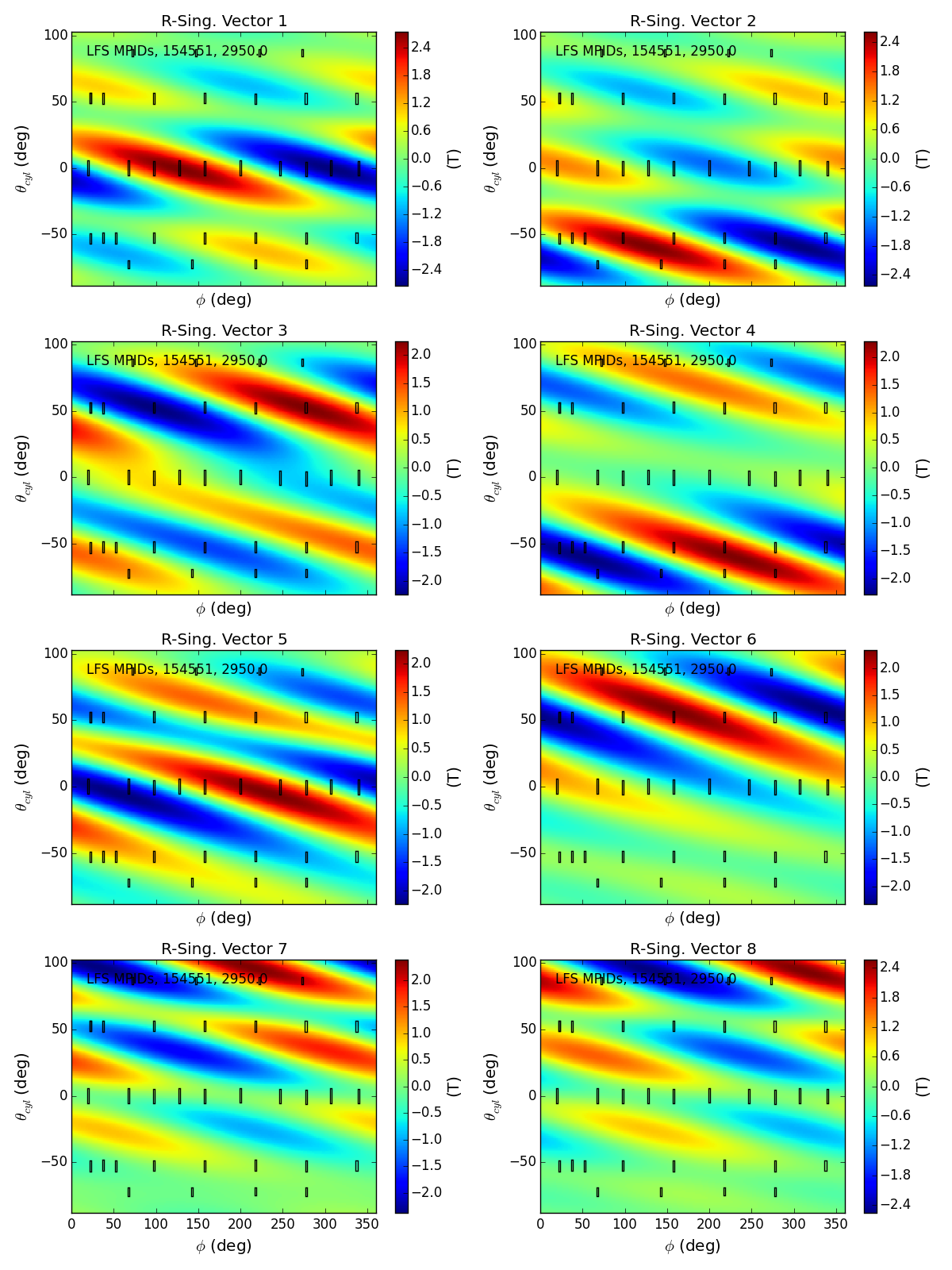

Let’s look at some of the first complex modes in the eigenspace for the LFS MPIDs. The first 5 correspond to the 10 cos,sin modes used in our fit with effective rank 10. The higher ones correspond to combinations of cos,sin modes that we deemed insufficiently constrained to be used in our analysis.

>>> f,ax = plt.subplots(4,2,figsize=plt.rcp_size*[3,4])

>>> for i,a in enumerate(ax.ravel()):

... f = fit.plot(dim=3,axes=a,fitvector=i+1)[0]

... t = a.set_title('R-Sing. Vector {:}'.format(i+1))

... f = lfsbp.plot2d(color='k',fill=False,axes=a)

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

Scroll over axes to change time

Calling plot2d for sensors in LFS MPIDs array.

>>> f.savefig(__packagedir__+'/doc/examples/magnetics_basis_functions_2d.png')

That’s awesome! We can see how the first eigenmodes are concentrated in the areas with many sensors, and are thus well constrained by the measurements. As the mode number increases, the regions of large amplitude migrate to areas between sensors, and the modes excluded by the conditioning are clearly combinations of cos,sin modes that the sensors can barely see.

Bases: pyMagnetics.magdata.Data, object

2d surface magnetic sensors object with built in data retrieval, model retrieval, and visualization.

# RETURNS A NEW DATA CLASS INSTANCE

cdfput( self, # Write Data instance to netcdf file name = None, # File name, if none use self.yname path=’.’, # Directory path to file tfile = None, # If not None, add cdf file to this tarfile instance and delete original cdf file format = ‘NETCDF4’,

- # Form of netCDF file

- # netCDF files come in several flavors (‘NETCDF3_CLASSIC’, # ‘NETCDF3_64BIT’, ‘NETCDF4_CLASSIC’, and ‘NETCDF4’). The first two flavors # are supported by version 3 of the netCDF library. ‘NETCDF4_CLASSIC’ # files use the version 4 disk format (HDF5), but do not use any features # not found in the version 3 API. They can be read by netCDF 3 clients # only if they have been relinked against the netCDF 4 library. They can # also be read by HDF5 clients. ‘NETCDF4’ files use the version 4 disk # format (HDF5) and use the new features of the version 4 API. The # ‘netCDF4’ module can read and write files in any of these formats. When

quiet = False, # IF True, DON’T PRINT FILE NAME WRITTEN TO )

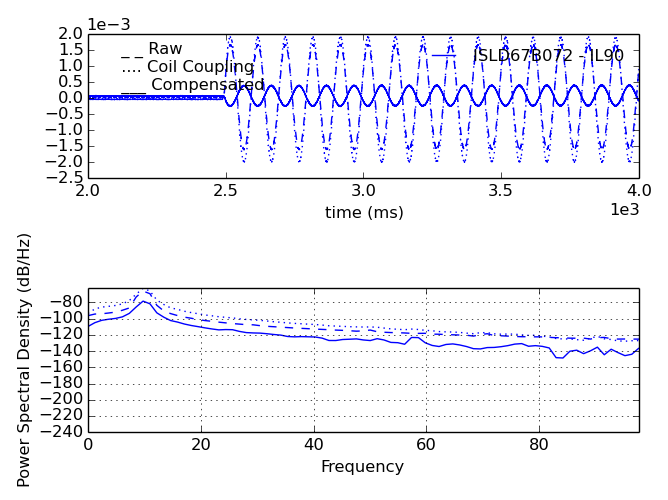

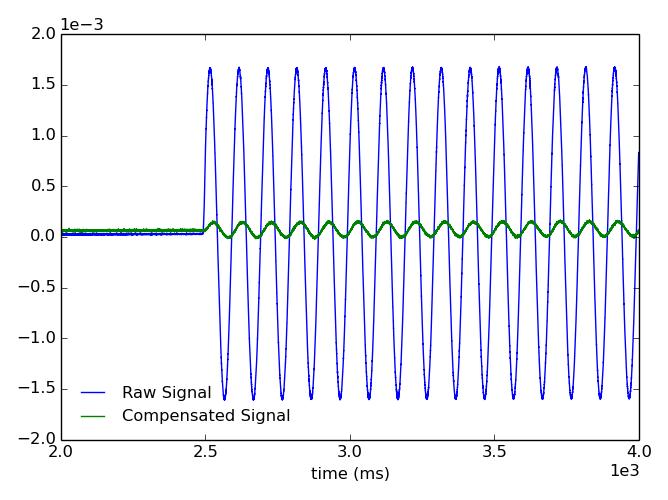

Attempt to compensate sensor data for vacuum coupling to 3D coils using pcs point names for coil currents.

Additional kwargs passed to response_function.compensate.

compress( # REMOVE VALUES FROM self (IN PLACE) WHERE CONDITION IS NOT SATISFIED. # CONDITION MUST HAVE THE SAME LENGTH AS THE AXIS ALONG WHICH COMPRESSION IS DONE. # IF CONDITION IS ‘unique’ MAKES AXIS UNIQUE VALUES (I.E. NO TWO X VALUES ARE THE SAME. # THE INSTANCE IS SORTED ALONG axis. self, condition, # LOGICAL CONDITION WITH LENGTH OF axis OR ‘unique’ TO COMPRESS OUT

# VALUES WITH THE SAME x (FIRST VALUE IS TAKEN)

axis = 0, # AXIS ALONG WHICH YOU WANT TO COMPRESS, F NOTATION, I.E. axis=0 IS self.x[0] )
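The two kinds of condition described for compress can be mimicked on bare numpy arrays. This is a sketch of the idea only; the real method works in place on a Data instance, and the arrays here are made up.

```python
import numpy as np

x = np.array([3.0, 1.0, 2.0, 2.0, 5.0])
y = np.array([30.0, 10.0, 20.0, 21.0, 50.0])

# Logical condition with the same length as the axis: keep points with x < 4.
mask = x < 4.0
x_kept, y_kept = x[mask], y[mask]

# 'unique'-style compression: sort along x, then keep the first value at each repeated x.
order = np.argsort(x, kind="stable")
x_sorted, y_sorted = x[order], y[order]
x_unique, first = np.unique(x_sorted, return_index=True)
y_unique = y_sorted[first]
```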

conj( # COMPLEX CONJUGATE self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = conj(self.y)

contour( # GENERATE SERIES OF CONTOUR LINES FOR 2-D DATA self, vc = None, # SEQUENCE OF CONTOUR VALUES nc = 10, # IF vc IS None THEN nc=NUMBER OF CONTOURS BETWEEN min(self.y) and max(self.y) zmax = None, # IF NOT None VALUES OF self.y ABOVE zmax ARE IGNORED IN CONTOURING ) # RETURNS A NEW Data INSTANCE z WITH # z.y[ i, 0, : ] = X0 VALUES FOR THE i’TH CONTOUR # z.y[ i, 1, : ] = X1 VALUES FOR THE i’TH CONTOUR # z.x = [ INDEX ALONG CONTOUR, X INDEX , CONTOUR INDEX ] # z.ncont[i] = NUMBER OF POINTS IN i’TH CONTOUR # z.kcont[i] = 10* INDEX OF VC FOR THE i’TH CONTOUR + IFLAG WHERE # IFLAG=0 FOR A CLOSED CONTOUR AND IFLAG=1 FOR AN OPEN CONTOUR

Returns copy.copy of Sensor object.

copy( # COPY ALL ATTRIBUTES OF A Data INSTANCE, RETURNS A NEW INSTANCE # WARNING ! copy.copy IS USED SO THAT IN MOST CASES NEW REFERENCES # ARE CREATED RATHER THAN COPIES, THUS ANY MUTABLE ATTRIBUTES CHANGED # IN THE COPIED INSTANCE WILL BE CHANGED IN THE ORIGINAL INSTANCE. THIS # IS AVOIDED IN THE CASE OF THE x AND xerror LISTS BY DOING FULL COPIES # (I.E. MAKING CHANGES TO x IN THE COPIED INSTANCE WILL NOT CHANGE THE # ORIGINAL). NOTE ALSO THAT THE ATTRIBUTE t_domains AND ANY HIDDEN # ATTRIBUTES, I.E. BEGINNING WITH _, ARE NOT COPIED. self, )

Returns copy.deepcopy of Sensor object.
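The difference between the shallow and deep copies described above is standard Python behaviour and can be seen with a small stand-in class. `Record` is a hypothetical placeholder, not a class from this package.

```python
import copy

class Record:
    """Hypothetical stand-in for an object with mutable attributes."""
    def __init__(self):
        self.x = [[0.0, 1.0, 2.0]]
        self.meta = {"units": "T"}

original = Record()
shallow = copy.copy(original)      # new object, attributes are shared references
deep = copy.deepcopy(original)     # new object, attributes are recursively copied

shallow.meta["units"] = "G"        # also changes original.meta
deep.x[0][0] = 99.0                # leaves original.x untouched
```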

der( # FIRST DERIVATIVE ALONG AXIS USING CENTRAL DIFFERENCE self, axis = 0, # X AXIS INDEX TO TAKE DERIVATIVE ALONG ) # RETURNS: NEW Data CLASS INSTANCE, z, A COPY OF self # WITH z.y = DERIVATIVE, z.x[axis] = self.x[axis][1:-1]

First derivative along axis using central difference.

derivative( variable = 0, # The index of the variable of the function with respect to which the derivative is taken ) Returns: New InterpolatingFunction class instance with values = Derivative and axes[variable] = axes[variable][1:-1]
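A central difference on interior points, with the end points dropped as in the `[1:-1]` slicing above, can be sketched as follows. The helper name is hypothetical and the code works on bare arrays rather than Data instances.

```python
import numpy as np

def central_difference(x, y):
    """Central-difference dy/dx at interior points; returns (x[1:-1], dydx)."""
    return x[1:-1], (y[2:] - y[:-2]) / (x[2:] - x[:-2])

x = np.linspace(0.0, 1.0, 11)
xd, dydx = central_difference(x, x**2)
```

The result is two points shorter than the input, which is why the returned axis is `x[1:-1]`.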

dump( # DUMP Data INSTANCE X,Y DATA TO COLUMNS IN AN ASCII TEXT FILE. # WORKS WITH ANY NUMBER OF Y DIMENSIONS self, dfile = None, # Data name in file: IF None USE self.yname.

# If form == ‘REVIEW’ full file name = shot#dfile.dat # If form != ‘REVIEW’ full file name = dfile_shot#.dat or # dfile.dat if append_shot==False

form = None, # IF form.upper() == ‘REVIEW’ USE REVIEW DATA FILE NAME AND HEADLINES append_shot = True, # IF True AND form != ‘REVIEW’, SHOT NUMBER IN SUFFIX headline = True, # IF True AND form != ‘REVIEW’, WRITE A HEADLINE WITH COLUMN NAMES auxdat = True, # IF True, DUMP yaux DATA AS ADDITIONAL COLUMNS tfile = None, # IF NOT None, ADD DUMP FILE TO THIS tarfile INSTANCE AND DELETE ORIGINAL ASCII FILE quiet = False, # IF True, DON’T PRINT FILE NAME WRITTEN TO )

fft( # FAST FOURIER TRANSFORM USING FFTW # ONLY WORKS FOR FIXED X-AXIS SPACING, USE .newx FIRST # IF YOU HAVE VARIABLE SPACING self, axis = 0, # AXIS ALONG WHICH TO TAKE FFT xmin = None, # MINIMUM X VALUE TO INCLUDE xmax = None, # MAXIMUM X VALUE TO INCLUDE assume_real = True, # IF TRUE RETURNS REAL FFT (HALF THE NUMBER OF POINTS) detrend = True, # IF TRUE A LINEAR LEAST SQUARES FIT IS SUBTRACTED FROM

# DATA ARRAY BEFORE FFT IS PERFORMED

quiet = 1 # IF 0 PRINT OUT EXTRA INFO ) # RETURNS: NEW Data INSTANCE, z, z.y = fft(self.y), z.x = FREQUENCY (1/self.x)
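The combination of linear detrending and a real FFT on a uniformly spaced axis can be approximated with numpy (the method itself is stated to use FFTW). The function name `real_fft` and the synthetic signal are assumptions; with time in ms the frequency axis comes out in kHz.

```python
import numpy as np

def real_fft(t, y, detrend=True):
    """Real FFT of uniformly sampled data, optionally removing a linear trend first."""
    dt = t[1] - t[0]
    if not np.allclose(np.diff(t), dt):
        raise ValueError("uniform spacing required; resample first")
    if detrend:
        # Subtract a linear least-squares fit before transforming.
        y = y - np.polyval(np.polyfit(t, y, 1), t)
    freq = np.fft.rfftfreq(len(t), d=dt)
    return freq, np.fft.rfft(y)

t = np.arange(10000) * 0.01                           # ms
y = 2.0 + 0.03 * t + np.sin(2.0 * np.pi * 5.0 * t)    # offset, drift, and a 5 kHz tone
freq, spectrum = real_fft(t, y)
peak = freq[np.argmax(np.abs(spectrum))]
```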

Uses scipy.signal.firwin to design a spectral filter and then applies it to the signal. Assumes 1D data. Overrides nyq, using the first time step in data.x[0] to calculate the Nyquist frequency. All frequency cutoffs are thus in kHz, and must be between 0 and the Nyquist frequency.

Suggested values : numtaps=40

Arguments and Key word arguments are taken from scipy.signal.firwin. Documentation below:

FIR filter design using the window method.

This function computes the coefficients of a finite impulse response filter. The filter will have linear phase; it will be Type I if numtaps is odd and Type II if numtaps is even.

Type II filters always have zero response at the Nyquist rate, so a ValueError exception is raised if firwin is called with numtaps even and having a passband whose right end is at the Nyquist rate.

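scale: Set to True to scale the coefficients so that the frequency response is exactly unity at a certain frequency. That frequency is either 0 (DC) if the first passband starts at 0 (i.e. pass_zero is True), the Nyquist rate if the first passband ends at the Nyquist rate (i.e. the filter is a single-band high-pass filter), or the center of the first passband otherwise.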

See also: scipy.signal.firwin2

Low-pass from 0 to f:

>>> from scipy import signal

>>> signal.firwin(numtaps, f)

Use a specific window function:

>>> signal.firwin(numtaps, f, window='nuttall')

High-pass (‘stop’ from 0 to f):

>>> signal.firwin(numtaps, f, pass_zero=False)

Band-pass:

>>> signal.firwin(numtaps, [f1, f2], pass_zero=False)

Band-stop:

>>> signal.firwin(numtaps, [f1, f2])

Multi-band (passbands are [0, f1], [f2, f3] and [f4, 1]):

>>> signal.firwin(numtaps, [f1, f2, f3, f4])

Multi-band (passbands are [f1, f2] and [f3,f4]):

>>> signal.firwin(numtaps, [f1, f2, f3, f4], pass_zero=False)
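To connect these generic firwin calls with cutoffs given in kHz, here is a hedged sketch that takes the sampling rate from the first time step of a time axis in ms. It calls scipy.signal.firwin with its `fs` keyword and applies the taps with a plain convolution; how the package method actually applies the filter is not shown in this documentation, so that part is an assumption. An odd numtaps is used here only so that the centered convolution introduces no half-sample shift.

```python
import numpy as np
from scipy import signal

t = np.arange(2000) * 0.02                     # time in ms, i.e. 50 kHz sampling
fs = 1.0 / (t[1] - t[0])                       # sampling rate in kHz from the first step
y = np.sin(2.0 * np.pi * 1.0 * t) + np.sin(2.0 * np.pi * 15.0 * t)

taps = signal.firwin(41, 5.0, fs=fs)           # low-pass with a 5 kHz cutoff
filtered = np.convolve(y, taps, mode="same")   # zero-delay application of the FIR taps
```

Away from the edges, the 15 kHz component is strongly attenuated and the 1 kHz component passes essentially unchanged.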

fit( # FIT TO A USER DEFINED OR STANDARD FUNCTION USING NONLINEAR LEAST SQUARES self, func_in, # FITTING FUNCTION DEFINED IN USER ROUTINE OR STRING TO SELECT FROM A PREDEFINED SET.

# IF DEFINED IN A USER ROUTINE ARGUMENTS MUST BE ( C, X, PARAM = NONE ) WHERE # C IS A SEQUENCE OF THE COEFFICIENTS TO BE FIT, # X IS A LIST OF MATRICES WHERE, E.G. MATRIX 0 GIVES THE VALUE # OF self.x[0] AT ALL POINTS ON THE GRID WHERE self.y IS DEFINED (THESE # MATRICES ARE SET UP AUTOMATICALLY WHEN .fit IS CALLED), # PARAM IS EXTRA PARAMETERS (ALLOWS ALTERING THE FUNCTION) # USER DEFINED FUNCTIONS CAN BE IN THE CALLING MODULE OR PUT IN A MODULE # CALLED extra_fit_functions.py WHICH IS AUTOMATICALLY IMPORTED ALLOWING # A STRING = FUNCTION NAME TO BE USED FOR func_in AS WITH OTHER PREDEFINED. # STANDARD PREDEFINED FUNCTIONS ARE ONE OF THE FOLLOWING, # (SEE data_fit_functions MODULE FOR MORE INFO) # POLY: 1-D POLYNOMIAL ON X[0], ORDER DETERMINED BY LEN(C0) # GAUSS:1-D GAUSSIAN ON X[0],C[0]EXP( (X-C[1])**2*C[2] ) # POWER:N-D POWER LAW; X[0]**C[0]*X[1]**C[1]...*C[N] # TSPLFUN:TENSIONED SPLINE # LINFUN:1-D TWO LINE FIT, C[0]=SYM,C[1]=PED,C[2]=SLOPEIN,C[3]=SLOPEOUT # TANH:1-D HYPERBOLIC TANGENT, C[0]=SYM,C[1]=WID,C[2]=PED,C[3]=OFF,C[4]=ALP # TANH_QUAD_QUAD:HYPERBOLIC TANGENT WITH QUADRATIC INNER AND OUTER EXTENSIONS # TANH_QUAD_LIN:HYPERBOLIC TANGENT WITH QUADRATIC INNER AND LINEAR OUTER EXTENSIONS # TANH_QUAD_CONST:HYPERBOLIC TANGENT WITH QUADRATIC INNER AND CONSTANT OUTER EXTENSIONS # TANH_0OUT: TANH WITH QUAD INNER AND 0 OUTER EXTENSIONS # TANH_MULTI:HYPERBOLIC TANGENT WITH INNER AND OUTER CONTROLLED ORDER CONTROLLED BY PARAM # TNH0:1-D HYPERBOLIC TANGENT, C[0]=SYM,C[1]=WID,C[2]=PED,C[3]=OFF,C[4]=ALP # TNH0_0OUT: TANH WITH LINEAR INNER AND 0 OUTER EXTENSIONS

c0, # STARTING VALUE FOR FIT COEFFICIENTS param = None, # EXTRA PARAMETERS TO PASS TO FUNCTION. SHOULD BE NUMBER OR SEQUENCE OF NUMBERS

# THAT CAN BE CAST AS A NUMERIC ARRAY (FOR MDS+ STORAGE)

# PROBLEMS WITH ord = FALSE FIND A SOLUTION WITH TRADITIONAL LEAST SQUARES MINIMIZATION # WITH WEIGHTS SET BY Y ERRORS ONLY USING scipy.optimize.leastsq WITH THE FOLLOWING PARAMETERS. # SEE scipy.optimize.leastsq DOCUMENTATION FOR MORE DETAILS ON THESE PARAMETERS ftol = 1.49012e-8, # CALC TERMINATION OCCURS WHEN BOTH THE ACTUAL AND PREDICTED RELATIVE

# REDUCTIONS IN THE SUM OF SQUARES ARE AT MOST ftol. THEREFORE, ftol MEASURES # THE RELATIVE ERROR DESIRED IN THE SUM OF SQUARES.

maxfev = 0, # THE CALC IS TERMINATED IF NUM OF CALLS TO func = maxfev, IF SET TO 0 USES 100*(len(c)+1) epsfcn = 0.0, # STEP FOR FORWARD-DIFFERENCE APPROX(IF DFUN=None), IF 0 DETERMINED BY MACHINE PRECISION factor = 100., # A PARAMETER DETERMINING THE INITIAL STEP BOUND 0.1<factor<100 diag = None, # A SEQUENCE OF len(c) USED AS SCALE FACTORS FOR c. CAN BE USED TO MAKE ALL c’s O(1).

# PROBLEMS WITH ord = TRUE FIND A SOLUTION WITH ORTHOGONAL DISTANCE REGRESSION # ALLOWING FOR ERRORS IN BOTH X AND Y USING scipy.odr WITH THE FOLLOWING PARAMETERS. # SEE scipy.odr DOCUMENTATION FOR MORE DETAILS ON THESE PARAMETERS sstol = None, # FLOAT SPECIFYING THE TOLERANCE FOR CONVERGENCE BASED ON THE RELATIVE

# CHANGE IN THE SUM-OF-SQUARES. THE DEFAULT VALUE IS EPS**(1/2) WHERE EPS # IS THE SMALLEST VALUE SUCH THAT 1 + EPS > 1 FOR DOUBLE PRECISION COMPUTATION ON THE MACHINE. # SSTOL MUST BE LESS THAN 1.

ndigit = None,# INTEGER SPECIFYING THE NUMBER OF RELIABLE DIGITS IN THE COMPUTATION OF THE FUNCTION. accept_questionable = False, # IF TRUE THEN ACCEPT CONVERGED BUT QUESTIONABLE RESULTS

) # RETURNS: A NEW Data INSTANCE WITH self.y REPLACED BY THE FITTED VALUES AND THE FOLLOWING EXTRA ATTRIBUTES # Additional attributes of z: # z.fit_coef = ARRAY OF FIT COEFFICIENTS # z.fit_coeferr = ARRAY OF ERRORS IN FIT COEFFICIENTS # z.fit_coefcov = COEFFICIENT COVARIANCE ARRAY, DIAGONAL IS coeferr**2 # z.fit_nu = NUMBER OF FITTING PARAMETERS # z.fit_chisq = CHISQ OF FIT # z.fit_ch2prob = PROBABILITY OF THIS CHISQ VALUE # z.fit_condnum = CONDITION NUMBER FOR FIT (AVAILABLE IN ODR ONLY) # z.fit_fjac = JACOBIAN (dy_i/d_coef_j) (AVAILABLE IN ODR ONLY) # z.yerror = ERROR BAR OF FIT = jac * coefcov * jac.T (AVAILABLE IN ODR ONLY) # z.fit_func = func_in # z.fit_param = FUNCTION CONTROL PARAMETERS (PARAM) (NOT INCLUDE IF PARAM=None) # z.__call__ (i.e. INSTANCE CAN BE CALLED AS A FUNCTION) z(x)= VALUE OF FIT AT X. # WITH MORE THAN ONE DIMENSION z(x0,x1,...). x VALUES CAN BE NUMBERS OR SEQUENCES. # RETURNED VALUE IS AN ARRAY WITH SHAPE = ( len(xn), len(xn-1), .. len(x0)), OR # A NUMBER IF ONLY ONE VALUE IS RETURNED. FOR z.nx=1: z() RETURNS ROOT NEAR LOCATION # OF min(abs(z.y)); z(x0,ider) RETURNS DERIVATIVE OF ORDER ider AT x0.

imag( # IMAGINARY PART self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = imag(self.y)

int( # INTEGRATE USING TRAPEZOID RULE self, axis = 0, # AXIS TO INTEGRATE ALONG ) # RETURNS A NEW Data INSTANCE, z, WITH z.y = integral(self.y)

Integrate using trapezoid rule.

int( variable = 0, # The index of the variable of the function with respect to which the integral is taken ) Returns: New InterpolatingFunction class instance with values = Integral

interp_fun( # CREATE A CALL METHOD FOR THE INSTANCE WHICH IS AN INTERPOLATING # FUNCTION. THE INTERPOLATED VALUE IS RETURNED WHEN THE INSTANCE # IS CALLED AS A FUNCTION. ANY NUMBER OF DIMENSIONS IS SUPPORTED # FOR LINEAR INTERPOLATION. THE ORDER OF THE X VALUES IN MULTI # DIMENSIONS CORRESPONDS TO THE X INDEX (I.E. THE OPPOSITE OF THE # INDEX ORDER IN Y). self, func = None, # NAME OF INTERPOLATING FUNCTION TO USE.

# NOTE THAT interp_fun IS CALLED AUTOMATICALLY IN .spline() AND .fit() # SO YOU DON’T NEED TO CALL IT DIRECTLY IF YOU HAVE USED THESE METHODS. # None: LINEAR INTERPOLATION # ‘spline’: INTERPOLATING CUBIC SPLINE (ALLOWS DERIVATIVES) # other string or python function : ‘INTERPOLATE’ WITH FIT FUNCTION # EITHER THE NAME OF A STANDARD FIT FUNCTION OR THE ACTUAL DESIRED # FIT FUNCTION (SEE .fit() DOCUMENTATIONS ). NOTE THAT IN CONTRAST # TO THE FIT METHOD self.y IS NOT REPLACED BY THE FITTED VALUES SO # THAT THE ‘INTERPOLATED’ VALUE MAY NOT MATCH self.y AT THE SAME X.

c0 = None, # INITIAL COEFFICIENTS FOR .fit() WHEN DOING FIT THROUGH .interp_fun() param = None,# CONTROL PARAMETERS TO PASS TO FIT FUNCTION, (SEE .fit() DOCUMENTATION) default = 0.,# DEFAULT VALUE FOR LINEAR INTERPOLATION (OUTSIDE OF X RANGE) # # __call__ METHOD ARGUMENTS: self(args) # *args,# FOR self.nx == 1:

# IF len(args) == 0: # RETURN ROOT # FOR SPLINE INTERPOLATING FUNCTION ALL ROOTS IN X[0] RANGE ARE FOUND, # FOR OTHER FORMS ROOT NEAREST LOCATION WHERE abs(self.y) IS MINIMUM # IF len(args) == 1: # RETURNS VALUES AT LOCATION(S) args[0] (args[0] CAN BE A SEQUENCE OR NUMBER) # IF len(args) == 2 and args[1] >=0: # RETURNS DERIVATIVE OF ORDER args[1] AT LOCATION(S) args[0] # (args[0] CAN BE A SEQUENCE OR NUMBER) # IF len(args) == 3 AND args[1] == -1: # RETURNS DEFINITE INTEGRAL BETWEEN args[0] and args[2] # (args[0],args[2] MUST BE NUMBERS) # FOR self.nx > 1: # x0, x1, x2, ... CORRESPONDING TO THE DIFFERENT X AXES, # WHERE x CAN BE A NUMBER OR SEQUENCE. # RETURNS: AN ARRAY WITH SHAPE (len(xn), len(xn-1), ... len(x0)) # OR SINGLE NUMBER CORRESPONDING TO VALUES AT x0,x1,...

)

inv_fft( # INVERSE FAST FOURIER TRANSFORM USING FFTW. # ONLY WORKS FOR FIXED X-AXIS SPACING, USE .newx FIRST IF YOU HAVE VARIABLE SPACING. # IT IS ASSUMED THAT THE INPUT DATA IS A FULL FOURIER TRANSFORM, # I.E. EXTENDING FROM FREQ = 0 TO THE NYQUIST FREQUENCY FOR assume_real = True AND INCLUDING # THE NEGATIVE FREQUENCY DATA FOR POINTS ABOVE THE NYQUIST FREQUENCY. SLICING WITH fmin # AND fmax IS DONE BY ZEROING OUT THE DATA OUTSIDE THE SLICE INTERVAL (A BAND PASS FILTER). self, axis = 0, # AXIS ALONG WHICH TO TAKE FFT fmin = None, # MINIMUM f VALUE TO INCLUDE fmax = None, # MAXIMUM f VALUE TO INCLUDE assume_real = True, # IF TRUE ASSUME self IS A REAL FFT (HALF THE NUMBER OF POINTS)

# AND USE SYMMETRY PROPERTIES OF THE FFT OF A REAL ARRAY # TO CONSTRUCT THE FULL FFT BEFORE INVERTING

quiet = 1 # IF 0 PRINT OUT EXTRA INFO ) # RETURNS: NEW Data INSTANCE, z, z.y = inv_fft(self.y), z.x = TIME (1/self.x)
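The band-pass behaviour described for fmin and fmax, zeroing the spectrum outside the interval before inverting, can be illustrated with numpy's real FFT pair. This is a sketch on bare arrays with a hypothetical helper name; the package method is stated to use FFTW and to operate on Data instances.

```python
import numpy as np

def band_pass_via_fft(t, y, fmin, fmax):
    """Zero the real-FFT spectrum outside [fmin, fmax], then invert back to time."""
    dt = t[1] - t[0]
    freq = np.fft.rfftfreq(len(t), d=dt)
    spectrum = np.fft.rfft(y)
    spectrum[(freq < fmin) | (freq > fmax)] = 0.0
    # irfft uses the symmetry of a real signal's FFT to rebuild the full spectrum.
    return np.fft.irfft(spectrum, n=len(t))

t = np.arange(10000) * 0.01                      # ms, so frequencies are in kHz
slow = np.sin(2.0 * np.pi * 1.0 * t)
fast = 0.5 * np.sin(2.0 * np.pi * 20.0 * t)
recovered = band_pass_via_fft(t, slow + fast, 10.0, 30.0)
```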

list( # LIST NAMES AND RANGES OF Data CLASS INSTANCE self, )

Returns natural logarithm of data.

Note: By including this, we enable the numpy function to be applied directly to a data object (i.e. np.log(density)).

mdsput( # # WRITE DATA INSTANCE TO MDS+ AS A SIGNAL NODE. # # IF THE INSTANCE HAS FIT OR SPLINE ATTRIBUTES :fitname AND :fitdoc ALONG WITH THE # OTHER FIT AND SPLINE ATTRIBUTES ARE ADDED AS SUBNODES. THESE ARE USED TO RECONSTRUCT # THE FIT OR SPLINE WHEN THE DATA IS READ FROM MDS+. # # ONE LAYER OF NUMERIC OR STRING SUBNODES IS ALLOWED, ANY NUMBER OF LAYERS OF Data # INSTANCE SUBNODES IS ALLOWED AND HANDLED BY RECURSION. Data TYPE SUBNODES CAN IN # TURN HAVE ONE LAYER OF NUMERIC OR STRING SUBNODES. # SUBNODES ARE NAMED AS ENTRY IN self.__dict__.keys, SUBNODES DO NOT HAVE TAGNAMES. # self, tree, # MDS+ TREE TO STORE DATA IN path = ‘’, # BRANCH OF MDS+ TREE (DIRECTORY PATH) name = None, # NAME TO USE FOR MAIN NODE, IF NONE THEN = self.yname shot = None, # SHOT NUMBER, IF NONE THEN = self.shot tagname = None, # IF NOT NONE ATTACH THIS MDS+ TAGNAME TO THE NODE create = 0, # 0: IF NODE EXISTS AND IS OF CORRECT TYPE TRY TO WRITE TO IT,

# IF NO EXISTING NODE OR INCORRECT TYPE CREATE IT # 1: CREATE NEW NODE, DELETE OLD ONE IF IT EXISTS

comment = None, # COMMENT TO ADD AS SUBNODE open_tree = 1, # OPEN TREE ON CALL AND CLOSE ON RETURN,

# SET TO 0 FOR MULTIPLE WRITES TO SAME TREE

quiet = 0, # 1: PRINT EXTRA STUFF )

newx( # CREATE A NEW X AXIS BY LINEAR INTERPOLATION self, xnew = None, # IF xnew IS None: USE MIN DX FOR NEW SPACING ALONG EACH AXIS

# IF self.nx == 1: # IF xnew = NUMBER USE DX = xnew FOR SPACING OF NEW AXIS # IF xnew = ARRAY USE THIS FOR THE NEW AXIS # IF self.nx > 1: # IF xnew != None xnew MUST BE A LIST WITH VALUES OR None FOR EACH AXIS # IF xnew[i] IS None: USE MIN DX[i] FOR NEW SPACING ALONG I’TH AXIS # IF xnew[i] = NUMBER != 0 USE DX[i] = xnew[i] FOR SPACING OF NEW AXIS # IF xnew[i] = 0, no CHANGE ON THIS AXIS # IF xnew[i] = ARRAY USE THIS FOR THE NEW AXIS # # NOTE: CHANGING AXIS FOR self.nx > 1 CAN BE TIME CONSUMING SINCE INTERPOLATION # IS DONE FOR ALL POINTS, I.E. len(xnew[0])*len(xnew[1])*...

) # Returns: new Data instance on xnew
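For the 1D default case (xnew is None, so the minimum spacing is used), resampling by linear interpolation can be sketched as below. The helper name and the example arrays are hypothetical, and only bare arrays are handled.

```python
import numpy as np

def resample_min_dx(x, y):
    """Resample (x, y) onto a uniform axis using the smallest spacing found in x."""
    dx = np.min(np.diff(x))
    # The half-step margin keeps the last point despite floating-point round-off.
    x_new = np.arange(x[0], x[-1] + 0.5 * dx, dx)
    return x_new, np.interp(x_new, x, y)

x = np.array([0.0, 1.0, 1.5, 3.0])   # non-uniform axis, smallest step is 0.5
y = 2.0 * x + 1.0
x_new, y_new = resample_min_dx(x, y)
```

A uniform axis produced this way is what the FFT-based methods above require.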

newy( # CREATE A NEW INSTANCE BASED ON THE CALLBACK FUNCTION ASSOCIATED WITH self. # EITHER VALUES OR DERIVATIVES CAN BE RETURNED. # IF self HAS NO CALLBACK FUNCTION AN INTERPOLATING SPLINE IS SET UP self, *args # args[0:self.nx]: args[i] IS AN ARRAY OF THE iTH X VALUES. IF = NONE USE self.x[i]

# args[self.nx,2*self.nx] : DERIVATIVE ORDER FOR EACH AXIS, 0=VALUE, 1=FIRST DERIVATIVE, ...

) # RETURNS A NEW DATA INSTANCE # IF DOING A DERIVATIVE OR INTEGRAL OR THE INTERPOLATING FUNCTION WAS NOT DEFINED FOR self # CREATES A SPLINE INTERPOLATING CALLBACK FUNCTION, OTHERWISE USES self._interpolatingfunction

Plot data.Data class object using customized matplotlib methods (see pypec.moplot).

Additional kwargs passed to matplotlib.Axes.plot (1D) or matplotlib.Axes.pcolormesh (2D) functions.

Note: Including the ‘marker’ key in kwargs uses matplotlib.Axes.errorbar for 1D plots when data has yerror data.

Displays the poloidal cross section of an ipec Data class as a line on r,z plot.

All kwargs passed to matplotlib.pyplot.plot

Shows an ‘unrolled’ surface in phi,theta space.

Additional kwargs passed to matplotlib.pyplot contour.

Shows an ‘unrolled’ surface in phi,theta space.

All kwargs passed to Axes3D.plot or Axes3D.plot_surface.

Plot Power Spectrum Density of data.Data class object using matplotlib methods (see pypec.moplot).

Additional kwargs passed to matplotlib.Axes.psd

real( # REAL PART self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = real(self.y)

rebuild( # RETURN AN INSTANCE OF self FOR SHOT (DEFAULT=self.shot) BUILT THE SAME # WAY AS self (I.E. SAME COMBINATION OF POINT NAMES AND PROCESSING). # IF A FUNCTION IS REQUIRED IN A METHOD IN self.build (SUCH AS A FUNCTION # PASSED TO SMOOTH) ITS NAME (AS A STRING) IS PASSED THROUGH *functions. # IF SEVERAL FUNCTIONS ARE REQUIRED THEY MUST BE GIVEN IN THE SAME ORDER AS # THEY ARE NEEDED IN self.build. THE FUNCTIONS MUST EXIST IN MODULE in_module self, shot = None, # NEW SHOT NUMBER TO BUILD INSTANCE ON in_module = “__main__”, # NAMESPACE WHERE OPTIONAL REQUIRED FUNCTIONS EXIST *functions # NAMES OF OPTIONAL FUNCTIONS ) # RETURNS: NEW INSTANCE BUILT AS self FOR NEW SHOT # IF self.build IS None OR AN EMPTY STRING RETURNS None

Subtracts a uniform base value from the signal data, where the base is an average of the amplitude over a specified time period.

save( # SAVE TO A cPickle FILE self, sfile = None, # IF NONE FILE=yname+shot. .Data IS APPENDED )

Attempt to read data from mds.

Note

A 2 shot (current and last) history is kept internally and referenced for faster access to working data.

Additional kwargs passed to Data object initialization.

Interpolate model output to sensor surface and set_data using a time-independent data object.

Currently supports IPEC model brzphi output format (table headers must include r,z,imag(b_r),real(b_r),imag(b_z), and real(b_z)).

shape( # RETURN self.y.shape self, )

skip( nskip=0, axis=0 ): skip nskip points along axis

skipval( xskip=0., axis=0 ): skip deltax along axis

# SMOOTH DATA def smooth(

# SMOOTH INSTANCE WITH ARBITRARY KERNEL (RESPONSE FUNCTION) # SMOOTHING CAN BE DONE USING A SET OF PREDEFINED RESPONSE FUNCTIONS OR # USING AN INPUT RESPONSE FUNCTION. SMOOTHING CAN BE DONE ON A GIVEN AXIS # FOR DATA WITH MULTIPLE X AXES. self, xave = None, # AVERAGING INTERVAL (SEE FAVE) OR ARBITRARY ARGUMENT TO FAVE fave = None, # either a user input response function or a string that selects

# from the following predefined response functions and lag windows: # # triang : SYMMETRIC TRIANGULAR RESPONSE: tave = 1/2 TOTAL INTERVAL # back : BACK AVERAGE: tave = AVERAGING INTERVAL # foward : FORWARD AVERAGE: tave = AVERAGING INTERVAL # center : CENTERED AVERAGE: tave = AVERAGING INTERVAL # rc : RC: tave = TIMECONSTANT # weiner : WIENER (OPTIMAL) FILTER. THIS IS SIMILAR TO A CENTERED # AVERAGE EXCEPT THAT THE NOISE LEVEL IS ESTIMATED BASED ON THE # ENTIRE INTERVAL AND DATA OUTSIDE THE NOISE LEVEL HAVE LESS # AVERAGING. HERE xave CAN BE A TWO ELEMENT LIST OR TUPLE WITH # THE SECOND ELEMENT BEING AN INPUT NOISE LEVEL (SIGMA NOT SIGMA**2) # (OTHERWISE THE NOISE LEVEL IS COMPUTED FROM THE SIGNAL). # median : MEDIAN FILTER # order : ORDER FILTER, NOTE THAT IN THIS CASE fav = [‘order’,order] WHERE # E.G. order=0.1 WILL GIVE THE LOWER 10 % VALUE # normal : NORMAL DISTRIBUTION, HERE xave = [ sigma, cutoff ] # WHERE K ~ EXP(-X**2/(2*sigma**2)), AND, cutoff = EXTEND KERNEL TO # X = +/- cutoff*sigma, sigma = 0.8493*FWHM. # If xave = sigma, cutoff is assumed = 2.5 . # trap : TRAPEZOID. xave=[tave0,tave1]: tave0 = bottom of trapezoid, tav1=top<tave0 # prob : PROBABILITY DISTRIBUTION, HERE xave = [tave, sigma, cutoff] # K ~ P( ( X + tave/2 )/sigma ) - P( ( X - tave/2 )/sigma ) AND # P(Z) = ( int(-inf to Z)(exp(-t**2/2)) )/sqrt(2pi). FOR SMALL sigma THIS IS # A BOXCAR OF WIDTH = tave. WINGS ARE ADDED AT FINITE sigma. # cutoff WHEN Z= tave/2 + cutoff*sigma (cutoff=2.5 GIVES K=0.6%) # If xave = [tave,sigma], cutoff is assumed = 2.5 . # ---------------------- # IF fave IS A USER DEFINED FUNCTION IT MUST TAKE TWO ARGUMENTS: # fave(xave,dx) WHERE xave IS BY DEFAULT THE AVERAGING INTERVAL, HOWEVER # xave IS ONLY USED IN THE CALL TO fave AND THUS IT CAN BE ANY PYTHON # DATA STRUCTURE. dx IS THE X INTERVAL OF THE DATA WHICH IS PASSED IN AT RUN TIME. # fave SHOULD RETURN A 1-D ARRAY OF THE RESPONSE FUNCTION SAMPLED AT # dx INTERVALS, SYMMETRICALLY CENTERED ON THE DATA POINT.
# # ---------------------- # LAG WINDOWS: # IN ADDITION, THE SMOOTH FUNCTION IS USED TO IMPLEMENT LAG WINDOWS FOR THE # ESTIMATION OF POWER SPECTRA. THE FOLLOWING LAG WINDOWS ARE SUPPORTED USING # THE fave ARGUMENT: # Bartlett, Blackman, Hamming, Hanning, Parzen, and Square # NOTE THAT THESE ARE UNNORMALIZED KERNELS !!!!! # ---------------------- axis = 0, # X-AXIS FOR SMOOTHING IN THE CASE OF MULTIPLE X AXES correlation = False, # IF TRUE USE CORRELATION RATHER THAN CONVOLUTION, THIS REVERSES

# ASYMMETRICAL KERNELS

- use_fft = True, # SMOOTH BY CONVOLUTION OF FFTs OF SIGNAL AND KERNEL RATHER THAN DIRECT

- # CONVOLUTION. THIS IS OFTEN A FACTOR OF TEN FASTER. # FOR 1D DATA WITH fave in [‘triang’,’back’,’forward’,’center’,’rc’,’normal’], # AN ANALYTIC FORM FOR THE FFT OF THE SMOOTHING KERNEL IS SUPPLIED IN data_smooth_fft.py, # OR USER SUPPLIED ANALYTICAL FORMULA FOR THE FFT OF THE KERNEL SUPPLIED IN # user_smooth_fft.py (SEE data_smooth_fft.py). OTHERWISE A DISCREET KERNEL FFT IS USED.

quiet = True, ) # RETURNS: NEW SMOOTHED Data INSTANCE
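The user-defined fave contract described above (two arguments, a kernel sampled at dx intervals and centered on the data point) might be sketched as follows. The function name `triangular_kernel`, the use of a plain list instead of an array, and the normalization to unit sum are assumptions made for illustration; they are not taken from the package.

```python
def triangular_kernel(xave, dx):
    """Hypothetical user-defined response function for the fave argument.

    xave : averaging interval (interpreted here as the triangle half-width)
    dx   : spacing of the data along the smoothed axis
    Returns the kernel sampled at dx intervals, centered on the data point.
    """
    half = max(int(round(xave / dx)), 1)  # samples on each side of the center
    weights = [1.0 - abs(i) / (half + 1.0) for i in range(-half, half + 1)]
    total = sum(weights)
    # Normalizing to unit sum is an assumption; it keeps a constant signal unchanged.
    return [w / total for w in weights]


kernel = triangular_kernel(xave=2.0, dx=0.5)
```

A function of this shape would presumably be passed through the fave argument with xave set to whatever data structure the function expects.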

sort( # SORT DATA ON ALL X AXES IN PLACE (self REPLACED BY SORTED VERSION) self, ) # self.t_domains IS DELETED IF ANY SORTING IS ACTUALLY DONE

spline( # B-SPLINE OF POLYNOMIAL SEGMENTS WITH VARIABLE KNOTS OR FIXED KNOTS AND CONSTRAINTS # IF constraint IS NOT None ONLY 1-D IS ALLOWED, OTHERWISE 1-D AND 2-D. # AN INTERPOLATING SPLINE PASSING THROUGH ALL THE DATA IS PRODUCED BY DEFAULT. self, knots = None, # KNOT LOCATIONS. IF MORE THAN 1-D A LIST OF KNOT LOCATIONS

# IF None THEN AUTOKNOTTING WITH NO CONSTRAINT (SEE s), # OTHERWISE A SEQUENCE OF EITHER BSPLINE OR ORDINARY TYPE KNOT # LOCATIONS (SEE knot_type). FOR 2-D IF ONE OF THE KNOT SETS IN THE # LIST IS None KNOTS ARE AUTOMATICALLY CHOSEN AS FOR AN INTERPOLATING # SPLINE ALONG THAT AXIS.

quiet = 0, # 0=PRINT RESULT SUMMARY, =1 DON'T PRINT SUMMARY ) # Returns a new Data instance, z, which is a copy of self except z.y = values of spline at self.x # Additional attributes of z: # z.spline_tck = [ knots, spline_coefficients, spline_order ] # z.spline_chisq = chisquared for the fit # z.spline_nu = number of fitting parameters # z.__call__ (i.e. instance can be called as a function) = z(x,ider) or z(x,y,iderx,idery) # where x=xarray, y=yarray,ider=derivative order: 0=value,1=first,etc. ider can be # omitted. For 1-D data z(x,-1,b) gives the integral of z from x to b. Also for 1-D # data z() gives the roots. For 2-D when x and y are given separately a 2-D array of # values is returned taking axes as x,y, in this case x and y MUST be ordered. If # instead z.nx=2 and x is a Nx2 array, [ [x0,y0], [x1,y1],... ] and y is omitted then # N values of z are returned and the points do not need to be ordered.

timing_domains( # COMPUTE TIMING DOMAINS (REGIONS WITH SAME POINT SPACING) FOR X INDEX = AXIS self, axis = 0, # AXIS ALONG WHICH TO GET TIMING DOMAINS ) # RETURNED VALUE: None, VALUE SET IN self.t_domains

tspline( # SPLINE WITH TENSION. CURRENTLY ONLY 1-D BUT 2-D COULD BE ADDED IF NEEDED. # METHOD ALLOWS PRODUCING: # 1) INTERPOLATING SPLINE AT A GIVEN TENSION VALUE (KNOTS AT ABSCISSA VALUES AND PASSING THROUGH ALL DATA). # ALLOWS SETTING DERIVATIVE VALUES AT END POINTS # 2) SMOOTHING SPLINE AT A GIVEN TENSION BASED ON ERROR BARS AND SMOOTHING FACTOR s (KNOTS AT ABSCISSA VALUES) # 3) FITTING SPLINE TO DATA WITH ERROR BARS (KNOTS SPECIFIED). DERIVATIVES AND/OR VALUES AT KNOT END POINTS CAN # BE SPECIFIED; TENSION CAN BE SPECIFIED OR FIT ALONG WITH Y VALUES AT KNOTS. self, knots = None, # IF None KNOTS ARE self.x[0], AND => INTERPOLATING OR SMOOTHING SPLINE,

# IF NOT None => FITTING SPLINE

y0 = None, # FIX Y VALUE AT FIRST KNOT TO y0 FOR FITTING SPLINE, IF None THEN FIT FIRST KNOT Y VALUE y1 = None, # FIX Y VALUE AT LAST KNOT TO y1 FOR FITTING SPLINE, IF None THEN FIT LAST KNOT Y VALUE yp0 = None, # FIX DERIVATIVE OF Y AT FIRST KNOT TO yp0, IF None THEN FLOAT. yp1 = None, # FIX DERIVATIVE OF Y AT LAST KNOT TO yp1, IF None THEN FLOAT. tension = 0.0, # THE TENSION FACTOR. THIS VALUE INDICATES THE DEGREE TO WHICH THE FIRST DERIVATIVE PART OF THE

# SMOOTHING FUNCTIONAL IS EMPHASIZED. IF tension IS NEARLY ZERO (E. G. .001) THE RESULTING CURVE IS # APPROXIMATELY A CUBIC SPLINE. IF tension IS LARGE (E. G. 50.) THE RESULTING CURVE IS NEARLY A # POLYGONAL LINE. FOR A FITTING SPLINE IF tension = None, tension IS FIT ALONG WITH THE VALUES AT THE KNOTS.

quiet = 0, # 0=PRINT RESULT SUMMARY, =1 DON'T PRINT SUMMARY ) # Returns a new Data instance, z, which is a copy of self except z.y = values of spline at self.x # Additional attributes of z: # z.tspl_coef = Array( [ x_knots, y_knots, y''_knots ] ) # z.tspl_tens = tension # z.tspl_chisq = chisquared for the fit # z.tspl_nu = number of fitting parameters # z.__call__ (i.e. instance can be called as a function) = z(x,ider) # where x=xarray, y=yarray,ider=derivative order(optional): 0=value,1=first # z(x,-1,b) gives the integral of z from x to b(<=x_n).

uniquex( # Replace elements of self with the same x (independent variable) values # within a tolerance xtol with average values. # z.y = Sum(self.y/self.yerror**2)/Sum(1/self.yerror**2) # z.yerror = Sqrt(1/Sum(1/self.yerror**2)) # Sums are over values with the same (within tolerance) x values. # z has redundant x's replaced by a single value self, xtol=1.e-8, # x values closer than xtol*max(x[1:]-x[:-1]) are considered the same ) # Returns a new data class instance with unique x values
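The inverse-variance weighting quoted in the uniquex description can be illustrated with a minimal standalone sketch. The helper name `merge_duplicates` is hypothetical, the input is assumed to be sorted in x, and an absolute tolerance `tol` stands in for the relative xtol scaling used by the method.

```python
import math


def merge_duplicates(x, y, yerr, tol):
    """Average points whose x values lie within tol of the first point in a group.

    Hypothetical helper; assumes x is sorted in ascending order.
    """
    groups = []
    for xi, yi, ei in zip(x, y, yerr):
        if groups and abs(xi - groups[-1][0]) <= tol:
            groups[-1][1].append(yi)
            groups[-1][2].append(ei)
        else:
            groups.append((xi, [yi], [ei]))
    merged = []
    for x0, ys, es in groups:
        w = [1.0 / e ** 2 for e in es]                       # inverse-variance weights
        y_avg = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
        y_err = math.sqrt(1.0 / sum(w))
        merged.append((x0, y_avg, y_err))
    return merged


merged = merge_duplicates(
    [0.0, 1.0, 1.0, 2.0], [5.0, 2.0, 4.0, 7.0], [1.0, 1.0, 1.0, 0.5], tol=1e-8
)
```

In this made-up data the two points at x = 1.0 collapse to one point with the weighted mean and a reduced error bar.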

Create a data object consisting of data a vs. data b.

Examples:

If you want the beam torque as a function of rotation

>>> rotation = Data("-1*'cerqrotct6'/(2*np.pi*1.69)",153268,yunits='kHz',quiet=0)

SUBNODES: ['label', 'multiplier', 'tag4d', 'units', 'variable']

x0(ms) cerqrotct6(km/s)

>>> tinj = -2.4*Data('bmstinj',153268,quiet=0).smooth(100)

t(ms) bmstinj( )

>>> t_v_rot = data_vs_data(tinj,rotation)

>>> t_v_rot.xunits

['kHz']

xslice( # RETURN A SLICE CORRESPONDING TO RANGES OF X VALUES. self, *xslices, # ANY NUMBER OF COMMA SEPARATED TUPLES OF LISTS OF THE FORM (index,x1,x2).

# WHERE index IS THE INDEX OF THE X AXIS, x1 IS THE STARTING VALUE # AND x2 IS THE ENDING VALUE. IF x2 IS OMITTED A SLICE AT A PARTICULAR # x1 VALUE IS RETURNED. TO START AT THE BEGINNING OR END AT THE END # USE None FOR x1 OR x2

) # RETURNS: NEW SLICED INSTANCE
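The range selection that xslice describes can be sketched on plain lists. The helper `slice_by_x` is hypothetical, handles a single sorted axis only, and treats both end points as inclusive, which is an assumption about the method rather than documented behavior.

```python
from bisect import bisect_left, bisect_right


def slice_by_x(x, y, x1=None, x2=None):
    """Return the points with x1 <= x <= x2; None leaves that side open.

    Hypothetical helper; assumes x is sorted in ascending order.
    """
    lo = 0 if x1 is None else bisect_left(x, x1)
    hi = len(x) if x2 is None else bisect_right(x, x2)
    return x[lo:hi], y[lo:hi]


t = [0.0, 1.0, 2.0, 3.0, 4.0]
b = [10.0, 11.0, 12.0, 13.0, 14.0]
t_cut, b_cut = slice_by_x(t, b, 1.0, 3.0)      # bounded on both sides
t_tail, b_tail = slice_by_x(t, b, 2.5, None)   # open-ended toward the end
```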

Bases: object

Basic magnetic array class. Includes collection of sensor objects, mass manipulation and visualization of data, and a built-in ModeFit type object.

Addition and subtraction of arrays returns a new array with combined/reduced sensors dictionary attribute.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

- astype( self,

- # CHANGE THE NUMPY TYPE OF X,XERROR,Y,YERROR TO THE INPUT TYPE newdtype, # )

# RETURNS A NEW DATA CLASS INSTANCE

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

cdfput( self, # Write Data instance to netcdf file name = None, # File name, if none use self.yname path=’.’, # Directory path to file tfile = None, # If not None, add cdf file to this tarfile instance and delete original cdf file format = ‘NETCDF4’,

- # Form of netCDF file

- # netCDF files come in several flavors (‘NETCDF3_CLASSIC’, # ‘NETCDF3_64BIT’, ‘NETCDF4_CLASSIC’, and ‘NETCDF4’). The first two flavors # are supported by version 3 of the netCDF library. ‘NETCDF4_CLASSIC’ # files use the version 4 disk format (HDF5), but do not use any features # not found in the version 3 API. They can be read by netCDF 3 clients # only if they have been relinked against the netCDF 4 library. They can # also be read by HDF5 clients. ‘NETCDF4’ files use the version 4 disk # format (HDF5) and use the new features of the version 4 API. The # ‘netCDF4’ module can read and write files in any of these formats. When

- clobber= True, # If True, trying to write to an existing file will delete the old one,

- # otherwise an error will be raised

quiet = False, # IF True, DON’T PRINT FILE NAME WRITTEN TO )

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Attempt to compensate sensor data for vacuum coupling to 3D coils using pcs point names for coil currents.

- Key Word Arguments:

- iu : bool.

- Compensate for each individual upper I-coil coupling

- il : bool.

- Compensate for each individual lower I-coil coupling

- c : bool.

- Compensate for even C-coil pair couplings.

- fun_type: str.

- Choose ‘direct’, ‘response’ or ‘transfer’ functions.

- pair : bool.

- Use even coil pair coupling.

- display : bool. (figure.)

- Plot intermediate steps (to figure).

Additional kwargs passed to response_function.compensate.

- Returns:

- (figure).

- If kwargs['display'] is True (or a figure), return the figure.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

compress( # REMOVE VALUES FROM self (IN PLACE) WHERE CONDITION IS NOT SATISFIED. # CONDITION MUST HAVE THE SAME LENGTH AS THE AXIS ALONG WHICH COMPRESSION IS DONE. # IF CONDITION IS 'unique' MAKES AXIS UNIQUE VALUES (I.E. NO TWO X VALUES ARE THE SAME). # THE INSTANCE IS SORTED ALONG axis. self, condition, # LOGICAL CONDITION WITH LENGTH OF axis OR 'unique' TO COMPRESS OUT

# VALUES WITH THE SAME x (FIRST VALUE IS TAKEN)

axis = 0, # AXIS ALONG WHICH YOU WANT TO COMPRESS, F NOTATION, I.E. axis=0 IS self.x[0] )
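The two modes of compress (a logical condition, or 'unique') can be sketched on plain lists. Unlike the in-place method, this hypothetical `compress_points` helper returns new lists, and it covers one axis only.

```python
def compress_points(x, y, condition):
    """Keep points where condition is true, or the first point of each x if 'unique'.

    Hypothetical helper operating on plain lists; returns new lists.
    """
    if isinstance(condition, str) and condition == 'unique':
        order = sorted(range(len(x)), key=lambda i: x[i])   # stable sort along x
        keep = []
        for i in order:
            if not keep or x[i] != x[keep[-1]]:
                keep.append(i)                               # first value is taken
    else:
        keep = [i for i, ok in enumerate(condition) if ok]
    return [x[i] for i in keep], [y[i] for i in keep]


masked = compress_points([0, 1, 2, 3], [5, 6, 7, 8], [True, False, True, False])
unique = compress_points([2, 1, 2, 3], ['a', 'b', 'c', 'd'], 'unique')
```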

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

conj( # COMPLEX CONJUGATE self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = conj(self.y)

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

contour( # GENERATE SERIES OF CONTOUR LINES FOR 2-D DATA self, vc = None, # SEQUENCE OF CONTOUR VALUES nc = 10, # IF vc IS None THEN nc=NUMBER OF CONTOURS BETWEEN min(self.y) and max(self.y) zmax = None, # IF NOT None VALUES OF self.y ABOVE zmax ARE IGNORED IN CONTOURING ) # RETURNS A NEW Data INSTANCE z WITH # z.y[ i, 0, : ] = X0 VALUES FOR THE i’TH CONTOUR # z.y[ i, 1, : ] = X1 VALUES FOR THE i’TH CONTOUR # z.x = [ INDEX ALONG CONTOUR, X INDEX , CONTOUR INDEX ] # z.ncont[i] = NUMBER OF POINTS IN i’TH CONTOUR # z.kcont[i] = 10* INDEX OF VC FOR THE i’TH CONTOUR + IFLAG WHERE # IFLAG=0 FOR A CLOSED CONTOUR AND IFLAG=1 FOR AN OPEN CONTOUR

Return a copy of this SensorArray.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

copy( # COPY ALL ATTRIBUTES OF A Data INSTANCE, RETURNS A NEW INSTANCE # WARNING ! copy.copy IS USED SO THAT IN MOST CASES NEW REFERENCES # ARE CREATED RATHER THAN COPIES, THUS ANY MUTABLE ATTRIBUTES CHANGED # IN THE COPIED INSTANCE WILL BE CHANGED IN THE ORIGINAL INSTANCE. THIS # IS AVOIDED IN THE CASE OF THE x AND xerror LISTS BY DOING FULL COPIES # (I.E. MAKING CHANGES TO x IN THE COPIED INSTANCE WILL NOT CHANGE THE # ORIGINAL). NOTE ALSO THAT THE ATTRIBUTE t_domains AND ANY HIDDEN # I.E. BEGINNING WITH _ ARE NOT COPIED. self, )
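The warning about copy.copy sharing mutable attributes is standard Python behavior and can be reproduced with a toy class. `Record` below is a stand-in invented for this illustration; it is not the Data class.

```python
import copy


class Record:
    """Toy stand-in for an object holding one mutable attribute."""

    def __init__(self):
        self.y = [1.0, 2.0, 3.0]


original = Record()
shallow = copy.copy(original)      # new object, but shallow.y is the same list
deep = copy.deepcopy(original)     # new object with its own copy of y

shallow.y[0] = -1.0                # also visible through original.y
```

After the assignment, `original.y[0]` has changed as well, while `deep.y` still holds the initial values. This is the distinction between the copy and deepcopy methods documented here.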

Return a deepcopy of this SensorArray.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

der( # FIRST DERIVATIVE ALONG AXIS USING CENTRAL DIFFERENCE self, axis = 0, # X AXIS INDEX TO TAKE DERIVATIVE ALONG ) # RETURNS: NEW Data CLASS INSTANCE, z, A COPY OF self WITH # WITH z.y = DERIVATIVE, z.x[axis] = self.x[axis][1:-1]
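A central-difference derivative that drops the two end points, as the return description states (x[1:-1]), can be sketched in a few lines. The helper name `central_difference` is hypothetical and the sketch handles 1-D lists only.

```python
def central_difference(x, y):
    """First derivative by central differences; the end points are dropped."""
    xs = x[1:-1]
    dydx = [
        (y[i + 1] - y[i - 1]) / (x[i + 1] - x[i - 1])
        for i in range(1, len(x) - 1)
    ]
    return xs, dydx


x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [xi ** 2 for xi in x]
xd, dydx = central_difference(x, y)
```

On a uniform grid the central difference is exact for a quadratic, so the result here equals 2x at the interior points.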

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

First derivative along axis using central difference.

derivative( variable = 0, # The index of the variable of the function with respect to which the derivative is taken ) # Returns: New InterpolatingFunction class instance with values = derivative and axes[variable] = axes[variable][1:-1]

Disjoin other SensorArray object by removing any sensors in other from this SensorArrays’ dictionary.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

dump( # DUMP Data INSTANCE X,Y DATA TO COLUMNS IN AN ASCII TEXT FILE. # WORKS WITH ANY NUMBER OF Y DIMENSIONS self, dfile = None, # Data name in file: IF None USE self.yname.

# If form == 'REVIEW' full file name = shot#dfile.dat # If form != 'REVIEW' full file name = dfile_shot#.dat or # dfile.dat if append_shot==False

form = None, # IF form.upper() == 'REVIEW' USE REVIEW DATA FILE NAME AND HEADLINES append_shot = True, # IF True AND form != 'REVIEW', SHOT NUMBER IN SUFFIX headline = True, # IF True AND form != 'REVIEW', WRITE A HEADLINE WITH COLUMN NAMES auxdat = True, # IF True, DUMP yaux DATA AS ADDITIONAL COLUMNS tfile = None, # IF NOT None, ADD DUMP FILE TO THIS tarfile INSTANCE AND DELETE ORIGINAL ASCII FILE quiet = False, # IF True, DON'T PRINT FILE NAME WRITTEN TO )

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

fft( # FAST FOURIER TRANSFORM USING FFTW # ONLY WORKS FOR FIXED X-AXIS SPACING, USE .newx FIRST # IF YOU HAVE VARIABLE SPACING self, axis = 0, # AXIS ALONG WHICH TO TAKE FFT xmin = None, # MINIMUM X VALUE TO INCLUDE xmax = None, # MAXIMUM X VALUE TO INCLUDE assume_real = True, # IF TRUE RETURNS REAL FFT (HALF THE NUMBER OF POINTS) detrend = True, # IF TRUE A LINEAR LEAST SQUARES FIT IS SUBTRACTED FROM

# DATA ARRAY BEFORE FFT IS PERFORMED

quiet = 1 # IF 0 PRINT OUT EXTRA INFO ) # RETURNS: NEW Data INSTANCE, z, z.y = fft(self.y), z.x = FREQUENCY (1/self.x)
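The detrend-then-transform sequence described for fft can be illustrated without any external library. This sketch swaps in a naive O(N^2) discrete Fourier transform in place of FFTW, and the helper names `detrend_linear` and `real_dft` as well as the test signal are invented for the example.

```python
import cmath
import math


def detrend_linear(x, y):
    """Subtract the linear least-squares fit from y."""
    n = len(x)
    xm = sum(x) / n
    ym = sum(y) / n
    slope = sum((xi - xm) * (yi - ym) for xi, yi in zip(x, y)) / sum(
        (xi - xm) ** 2 for xi in x
    )
    return [yi - (ym + slope * (xi - xm)) for xi, yi in zip(x, y)]


def real_dft(x, y):
    """Naive DFT of real data on a uniform axis; returns n//2 + 1 frequencies."""
    n = len(y)
    dx = x[1] - x[0]
    freqs = [k / (n * dx) for k in range(n // 2 + 1)]
    spectrum = [
        sum(y[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        for k in range(n // 2 + 1)
    ]
    return freqs, spectrum


n = 64
t = [0.001 * j for j in range(n)]
# Offset plus ramp plus a sinusoid with eight full periods in the window.
raw = [3.0 + 50.0 * tj + math.sin(2 * math.pi * 125.0 * tj) for tj in t]
freqs, spectrum = real_dft(t, detrend_linear(t, raw))
peak = max(range(len(freqs)), key=lambda k: abs(spectrum[k]))
```

With the ramp removed first, the largest spectral component sits at the sinusoid frequency rather than at the lowest bins.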

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Uses scipy.signal.firwin to design a spectral filter and then applies it to signal. Assumes 1D data. Overrides nyq, using the first time step in data.x[0] to calculate the nyquist frequency. All frequency cutoffs are thus in kHz, and must be between 0 and the nyquist frequency.

Suggested values : numtaps=40

Arguments and Key word arguments are taken from scipy.signal.firwin. Documentation below:

FIR filter design using the window method.

This function computes the coefficients of a finite impulse response filter. The filter will have linear phase; it will be Type I if numtaps is odd and Type II if numtaps is even.

Type II filters always have zero response at the Nyquist rate, so a ValueError exception is raised if firwin is called with numtaps even and having a passband whose right end is at the Nyquist rate.

- numtaps : int

- Length of the filter (number of coefficients, i.e. the filter order + 1). numtaps must be odd if a passband includes the Nyquist frequency.

- cutoff : float or 1D array_like

- Cutoff frequency of filter (expressed in the same units as nyq) OR an array of cutoff frequencies (that is, band edges). In the latter case, the frequencies in cutoff should be positive and monotonically increasing between 0 and nyq. The values 0 and nyq must not be included in cutoff.

- width : float or None

- If width is not None, then assume it is the approximate width of the transition region (expressed in the same units as nyq) for use in Kaiser FIR filter design. In this case, the window argument is ignored.

- window : string or tuple of string and parameter values

- Desired window to use. See scipy.signal.get_window for a list of windows and required parameters.

- pass_zero : bool

- If True, the gain at the frequency 0 (i.e. the “DC gain”) is 1. Otherwise the DC gain is 0.

- scale : bool

Set to True to scale the coefficients so that the frequency response is exactly unity at a certain frequency. That frequency is either:

- 0 (DC) if the first passband starts at 0 (i.e. pass_zero is True)

- nyq (the Nyquist rate) if the first passband ends at nyq (i.e the filter is a single band highpass filter); center of first passband otherwise

- nyq : float

- Nyquist frequency. Each frequency in cutoff must be between 0 and nyq.

- h : (numtaps,) ndarray

- Coefficients of length numtaps FIR filter.

- ValueError

- If any value in cutoff is less than or equal to 0 or greater than or equal to nyq, if the values in cutoff are not strictly monotonically increasing, or if numtaps is even but a passband includes the Nyquist frequency.

scipy.signal.firwin2

Low-pass from 0 to f:

>>> from scipy import signal

>>> signal.firwin(numtaps, f)

Use a specific window function:

>>> signal.firwin(numtaps, f, window='nuttall')

High-pass ('stop' from 0 to f):

>>> signal.firwin(numtaps, f, pass_zero=False)

Band-pass:

>>> signal.firwin(numtaps, [f1, f2], pass_zero=False)

Band-stop:

>>> signal.firwin(numtaps, [f1, f2])

Multi-band (passbands are [0, f1], [f2, f3] and [f4, 1]):

>>> signal.firwin(numtaps, [f1, f2, f3, f4])

Multi-band (passbands are [f1, f2] and [f3,f4]):

>>> signal.firwin(numtaps, [f1, f2, f3, f4], pass_zero=False)

Fit amplitude and phase of sinusoidal basis functions corresponding to the specified mode numbers to a probe array.

Note that 2D basis functions use exp[i(n*phi-m*theta)] or exp(in*phi)*P^m(theta) convention.
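As a rough illustration of fitting the amplitude and phase of a single toroidal mode to an array of probes, the sketch below solves the 2x2 least-squares problem for a cos/sin basis. The function `fit_single_mode`, the real-valued convention A*cos(n*phi - phase), and the probe angles are all assumptions made for this example; the package's own fitting object may use a different basis and sign convention.

```python
import math


def fit_single_mode(phi, signal, n):
    """Least-squares fit of signal ~ a*cos(n*phi) + b*sin(n*phi).

    Returns (amplitude, phase) such that signal ~ amplitude*cos(n*phi - phase).
    """
    c = [math.cos(n * p) for p in phi]
    s = [math.sin(n * p) for p in phi]
    scc = sum(ci * ci for ci in c)
    sss = sum(si * si for si in s)
    scs = sum(ci * si for ci, si in zip(c, s))
    scy = sum(ci * yi for ci, yi in zip(c, signal))
    ssy = sum(si * yi for si, yi in zip(s, signal))
    det = scc * sss - scs * scs            # determinant of the normal equations
    a = (scy * sss - ssy * scs) / det
    b = (ssy * scc - scy * scs) / det
    return math.hypot(a, b), math.atan2(b, a)


# Made-up, unevenly spaced toroidal angles (degrees); not actual probe locations.
phi = [math.radians(d) for d in (20, 67, 97, 127, 137, 157, 200, 247, 277, 307, 340)]
signal = [3.0 * math.cos(p - 0.7) for p in phi]
amplitude, phase = fit_single_mode(phi, signal, n=1)
```

Solving the normal equations rather than projecting onto the basis matters here because the angles are not evenly spaced, so the cos and sin columns are not orthogonal.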

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

imag( # IMAGINARY PART self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = imag(self.y)

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

int( # INTEGRATE USING TRAPEZOID RULE self, axis = 0, # AXIS TO INTEGRATE ALONG ) # RETURNS A NEW Data INSTANCE, z, WITH z.y = integral(self.y)
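A trapezoid-rule integration along one axis can be sketched as a running sum. Returning the cumulative integral starting from zero at the first point is an assumption of this example, and `cumulative_trapezoid` is a hypothetical helper name.

```python
def cumulative_trapezoid(x, y):
    """Running trapezoid-rule integral of y over x, starting from zero."""
    total = 0.0
    out = [0.0]
    for i in range(1, len(x)):
        total += 0.5 * (y[i] + y[i - 1]) * (x[i] - x[i - 1])
        out.append(total)
    return out


x = [0.0, 1.0, 2.0, 3.0]
y = [0.0, 2.0, 4.0, 6.0]
integral = cumulative_trapezoid(x, y)
```

The trapezoid rule is exact for linear data, so the result follows x**2 at every sample.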

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Integrate using trapezoid rule.

int( variable = 0, # The index of the variable of the function with respect to which the integral is taken ) # Returns: New InterpolatingFunction class instance with values = integral

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

interp_fun( # CREATE A CALL METHOD FOR THE INSTANCE WHICH IS AN INTERPOLATING # FUNCTION. THE INTERPOLATED VALUE IS RETURNED WHEN THE INSTANCE # IS CALLED AS A FUNCTION. ANY NUMBER OF DIMENSIONS IS SUPPORTED # FOR LINEAR INTERPOLATION. THE ORDER OF THE X VALUES IN MULTI # DIMENSIONS CORRESPONDS TO THE X INDEX (I.E. THE OPPOSITE OF THE # INDEX ORDER IN Y). self, func = None, # NAME OF INTERPOLATING FUNCTION TO USE.

# NOTE THAT interp_fun IS CALLED AUTOMATICALLY IN .spline() AND .fit() # SO YOU DON'T NEED TO CALL IT DIRECTLY IF YOU HAVE USED THESE METHODS. # None: LINEAR INTERPOLATION # 'spline': INTERPOLATING CUBIC SPLINE (ALLOWS DERIVATIVES) # other string or python function : 'INTERPOLATE' WITH FIT FUNCTION # EITHER THE NAME OF A STANDARD FIT FUNCTION OR THE ACTUAL DESIRED # FIT FUNCTION (SEE .fit() DOCUMENTATION). NOTE THAT IN CONTRAST # TO THE FIT METHOD self.y IS NOT REPLACED BY THE FITTED VALUES SO # THAT THE 'INTERPOLATED' VALUE MAY NOT MATCH self.y AT THE SAME X.

c0 = None, # INITIAL COEFFICIENTS FOR .fit() WHEN DOING FIT THROUGH .interp_fun() param = None, # CONTROL PARAMETERS TO PASS TO FIT FUNCTION, (SEE .fit() DOCUMENTATION) default = 0., # DEFAULT VALUE FOR LINEAR INTERPOLATION (OUTSIDE OF X RANGE) # # __call__ METHOD ARGUMENTS: self(args) # *args, # FOR self.nx == 1:

# IF len(args) == 0: # RETURN ROOT # FOR SPLINE INTERPOLATING FUNCTION ALL ROOTS IN X[0] RANGE ARE FOUND, # FOR OTHER FORMS ROOT NEAREST LOCATION WHERE abs(self.y) IS MINIMUM # IF len(args) == 1: # RETURNS VALUES AT LOCATION(S) args[0] (args[0] CAN BE A SEQUENCE OR NUMBER) # IF len(args) == 2 and args[1] >=0: # RETURNS DERIVATIVE OF ORDER args[1] AT LOCATION(S) args[0] # (args[0] CAN BE A SEQUENCE OR NUMBER) # IF len(args) == 3 AND args[1] == -1: # RETURNS DEFINITE INTEGRAL BETWEEN args[0] and args[2] # (args[0],args[2] MUST BE NUMBERS) # FOR self.nx > 1: # x0, x1, x2, ... CORRESPONDING TO THE DIFFERENT X AXES, # WHERE x CAN BE A NUMBER OR SEQUENCE. # RETURNS: AN ARRAY WITH SHAPE (len(xn), len(xn-1), ... len(x0)) # OR SINGLE NUMBER CORRESPONDING TO VALUES AT x0,x1,...)

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

inv_fft( # INVERSE FAST FOURIER TRANSFORM USING FFTW. # ONLY WORKS FOR FIXED X-AXIS SPACING, USE .newx FIRST IF YOU HAVE VARIABLE SPACING. # IT IS ASSUMED THAT THE INPUT DATA IS A FULL FOURIER TRANSFORM, # I.E. EXTENDING FROM FREQ = 0 TO THE NYQUIST FREQUENCY FOR assume_real=True AND INCLUDING # THE NEGATIVE FREQUENCY DATA FOR POINTS ABOVE THE NYQUIST FREQUENCY. SLICING WITH fmin # AND fmax IS DONE BY ZEROING OUT THE DATA OUTSIDE THE SLICE INTERVAL (A BAND PASS FILTER). self, axis = 0, # AXIS ALONG WHICH TO TAKE FFT fmin = None, # MINIMUM f VALUE TO INCLUDE fmax = None, # MAXIMUM f VALUE TO INCLUDE assume_real = True, # IF TRUE ASSUME self IS A REAL FFT (HALF THE NUMBER OF POINTS)

# AND USE SYMMETRY PROPERTIES OF THE FFT OF A REAL ARRAY # TO CONSTRUCT THE FULL FFT BEFORE INVERTING

quiet = 1 # IF 0 PRINT OUT EXTRA INFO ) # RETURNS: NEW Data INSTANCE, z, z.y = inv_fft(self.y), z.x = TIME (1/self.x)

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

list( # LIST NAMES AND RANGES OF Data CLASS INSTANCE self, )

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Returns natural logarithm of data.

..note: By including this, we enable the numpy function to be applied directly to a data object (i.e. np.log(density)).

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

mdsput( # # WRITE DATA INSTANCE TO MDS+ AS A SIGNAL NODE. # # IF THE INSTANCE HAS FIT OR SPLINE ATTRIBUTES :fitname AND :fitdoc ALONG WITH THE # OTHER FIT AND SPLINE ATTRIBUTES ARE ADDED AS SUBNODES. THESE ARE USED TO RECONSTRUCT # THE FIT OR SPLINE WHEN THE DATA IS READ FROM MDS+. # # ONE LAYER OF NUMERIC OR STRING SUBNODES IS ALLOWED, ANY NUMBER OF LAYERS OF Data # INSTANCE SUBNODES IS ALLOWED AND HANDLED BY RECURSION. Data TYPE SUBNODES CAN IN # TURN HAVE ONE LAYER OF NUMERIC OR STRING SUBNODES. # SUBNODES ARE NAMED AS ENTRY IN self.__dict__.keys, SUBNODES DO NOT HAVE TAGNAMES. # self, tree, # MDS+ TREE TO STORE DATA IN path = '', # BRANCH OF MDS+ TREE (DIRECTORY PATH) name = None, # NAME TO USE FOR MAIN NODE, IF NONE THEN = self.yname shot = None, # SHOT NUMBER, IF NONE THEN = self.shot tagname = None, # IF NOT NONE ATTACH THIS MDS+ TAGNAME TO THE NODE create = 0, # 0: IF NODE EXISTS AND IS OF CORRECT TYPE TRY TO WRITE TO IT,

# IF NO EXISTING NODE OR INCORRECT TYPE CREATE IT # 1: CREATE NEW NODE, DELETE OLD ONE IF IT EXISTS

- create_tree = 1, # 1: IF MDS+ TREE EXISTS DO NOTHING, ELSE CREATE NEW EMPTY TREE

- # -1: IF MDS+ TREE EXISTS DO NOTHING, ELSE CREATE FROM MODEL TREE # 0: RAISE ERROR IF TREE DOES NOT EXIST

comment = None, # COMMENT TO ADD AS SUBNODE open_tree = 1, # OPEN TREE ON CALL AND CLOSE ON RETURN,

# SET TO 0 FOR MULTIPLE WRITES TO SAME TREE

quiet = 0, # 1: PRINT EXTRA STUFF )

Merge other SensorArray object by filling the sensors dictionary with any sensors existing in other but not in original array.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

newx( # CREATE A NEW X AXIS BY LINEAR INTERPOLATION self, xnew = None, # IF xnew IS None: USE MIN DX FOR NEW SPACING ALONG EACH AXIS

# IF self.nx == 1: # IF xnew = NUMBER USE DX = xnew FOR SPACING OF NEW AXIS # IF xnew = ARRAY USE THIS FOR THE NEW AXIS # IF self.nx > 1: # IF xnew != None xnew MUST BE A LIST WITH VALUES OR None FOR EACH AXIS # IF xnew[i] IS None: USE MIN DX[i] FOR NEW SPACING ALONG I’TH AXIS # IF xnew[i] = NUMBER != 0 USE DX[i] = xnew[i] FOR SPACING OF NEW AXIS # IF xnew[i] = 0, no CHANGE ON THIS AXIS # IF xnew[i] = ARRAY USE THIS FOR THE NEW AXIS # # NOTE: CHANGING AXIS FOR self.nx > 1 CAN BE TIME CONSUMING SINCE INTERPOLATION # IS DONE FOR ALL POINTS, I.E. len(xnew[0])*len(xnew[1])*...) # Returns: new Data instance on xnew
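For the 1-D case, the default behavior described for newx (a uniform axis built from the minimum spacing, filled by linear interpolation) can be sketched as follows. The helper names `interp_linear` and `resample_uniform` are hypothetical, and the input axis is assumed to be sorted.

```python
def interp_linear(x, y, xq):
    """Linear interpolation of (x, y) at sorted query points xq inside the x range."""
    j = 0
    out = []
    for q in xq:
        while j < len(x) - 2 and x[j + 1] < q:
            j += 1                               # advance to the bracketing interval
        frac = (q - x[j]) / (x[j + 1] - x[j])
        out.append(y[j] + frac * (y[j + 1] - y[j]))
    return out


def resample_uniform(x, y, dx=None):
    """Resample onto a uniform axis; dx defaults to the minimum spacing of x."""
    if dx is None:
        dx = min(b - a for a, b in zip(x[:-1], x[1:]))
    n = int((x[-1] - x[0]) / dx + 1e-9) + 1
    xnew = [x[0] + k * dx for k in range(n)]
    return xnew, interp_linear(x, y, xnew)


x = [0.0, 1.0, 3.0, 4.0]
y = [0.0, 10.0, 30.0, 40.0]
xnew, ynew = resample_uniform(x, y)
```

A uniform axis of this kind is what the fft and inv_fft descriptions ask for when the original spacing varies.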

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

newy( # CREATE A NEW INSTANCE BASED ON THE CALLBACK FUNCTION ASSOCIATED WITH self. # EITHER VALUES OR DERIVATIVES CAN BE RETURNED. # IF self HAS NO CALLBACK FUNCTION AN INTERPOLATING SPLINE IS SET UP self, *args # args[0:self.nx]: args[i] IS AN ARRAY OF THE iTH X VALUES. IF = NONE USE self.x[i]

# args[self.nx,2*self.nx] : DERIVATIVE ORDER FOR EACH AXIS, 0=VALUE, 1=FIRST DERIVATIVE, ...) # RETURNS A NEW DATA INSTANCE # IF DOING A DERIVATIVE OR INTEGRAL OR THE INTERPOLATING FUNCTION WAS NOT DEFINED FOR self # CREATES A SPLINE INTERPOLATING CALLBACK FUNCTION, OTHERWISE USES self._interpolatingfunction

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Plot data.Data class object using customized matplotlib methods (see pypec.moplot).

- Arguments:

- d : class.

- Data object from data.Data.

- Key Word Arguments:

- psd : bool

- Plot Power Spectrum Density (1D only).

- xname : str.

- Axis for 1D plot of 2D data.

- x2range : float or tuple.

- Affects 1D plots of 2D data. Float plots closest slice, tuple plots all slices within (min,max) bounds.

- fill : bool.

- Use combination of plot and fill_between to show error in 1D plots if yerror data available. False uses errorbar function if yerror data available.

- fillkwargs : dict.

- Key word arguments passed to matplotlib fill_between function when plotting error bars. Specifically, alpha sets the opacity of the fill.

- Returns:

- Figure.

Additional kwargs passed to matplotlib.Axes.plot (1D) or matplotlib.Axes.pcolormesh (2D) functions.

..note: Including the ‘marker’ key in kwargs uses matplotlib.Axes.errorbar for 1D plots when data has yerror data.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Displays the poloidal cross section of an ipec Data class as a line on r,z plot.

All kwargs passed to matplotlib.pyplot.plot

- Returns:

- figure.

- Poloidal cross sections of the vessel

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Shows an ‘unrolled’ surface in phi,theta space.

- Key Word Arguments:

- geom : str. Choose from:

- ‘cyl’ -> atan(z/r)

- ‘flat’ -> z (m->k_z is dimensional)

- ‘sphere’-> atan(z/R)

Additional kwargs passed to matplotlib.pyplot contour.

- Returns:

- figure.

- Poloidal cross sections of the vessel

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Shows an ‘unrolled’ surface in phi,theta space.

All kwargs passed to Axes3D.plot or Axes3D.plot_surface.

- Returns:

- figure.

Display the magnetic sensor array.

Additional kwargs are passed to pyplot plot, Rectangle, or plot_surface as keyword arguments.

Display the magnetic sensor array.

Additional kwargs passed to pyplot plot, Rectangle, or plot_surface as keyword arguments.

Print probe information to text file.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Plot Power Spectrum Density of data.Data class object using matplotlib methods (see pypec.moplot).

- Arguments:

- d : class.

- Data object from data.Data.

- Returns:

- Figure.

Additional kwargs passed to matplotlib.Axes.psd

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

real( # REAL PART self, ) # RETURNS NEW Data INSTANCE, z, WITH z.y = real(self.y)

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

rebuild( # RETURN AN INSTANCE OF self FOR SHOT (DEFAULT=self.shot) BUILT THE SAME # WAY AS self (I.E. SAME COMBINATION OF POINT NAMES AND PROCESSING). # IF A FUNCTION IS REQUIRED IN A METHOD IN self.build (SUCH AS A FUNCTION # PASSED TO SMOOTH) ITS NAME (AS A STRING) IS PASSED THROUGH *functions. # IF SEVERAL FUNCTIONS ARE REQUIRED THEY MUST BE GIVEN IN THE SAME ORDER AS # THEY ARE NEEDED IN self.build. THE FUNCTIONS MUST EXIST IN MODULE in_module self, shot = None, # NEW SHOT NUMBER TO BUILD INSTANCE ON in_module = “__main__”, # NAMESPACE WHERE OPTIONAL REQUIRED FUNCTIONS EXIST *functions # NAMES OF OPTIONAL FUNCTIONS ) # RETURNS: NEW INSTANCE BUILT AS self FOR NEW SHOT # IF self.build IS None OR AN EMPTY STRING RETURNS None

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Subtracts a uniform base value from the signal data, where the base is an average of the amplitude over a specified time period.

- Arguments:

- data : instance. data module Data() class for the desired sensor.

- xmin : Float. Start of the period over which the amplitude is averaged.

- xmax : Float. End of the time period over which the amplitude is averaged.

- Key Word Arguments:

- slope : bool. Remove linear offset calculated from range as well as a constant offset.

- axis : int. Axis over which the base range is taken.

- Returns:

- instance. data module Data() class with base subtracted from y attribute.
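The baseline subtraction described above, including the optional linear offset, can be sketched on plain lists. The function name `remove_baseline` and the sample values are hypothetical; the linear term is obtained here from a least-squares fit over the base window, which is one plausible reading of the slope keyword.

```python
def remove_baseline(x, y, xmin, xmax, slope=False):
    """Subtract the mean (and optionally a fitted line) taken over xmin <= x <= xmax."""
    idx = [i for i, xi in enumerate(x) if xmin <= xi <= xmax]
    xm = sum(x[i] for i in idx) / len(idx)
    ym = sum(y[i] for i in idx) / len(idx)
    m = 0.0
    if slope:
        sxx = sum((x[i] - xm) ** 2 for i in idx)
        sxy = sum((x[i] - xm) * (y[i] - ym) for i in idx)
        m = sxy / sxx                      # least-squares slope over the window
    return [yi - (ym + m * (xi - xm)) for xi, yi in zip(x, y)]


t = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
raw = [2.0, 2.5, 3.0, 3.5, 9.0, 4.5]       # slow drift plus one event at t = 4
flat = remove_baseline(t, raw, 0.0, 3.0)
detrended = remove_baseline(t, raw, 0.0, 3.0, slope=True)
```

With slope=True the drift is removed everywhere and only the event at t = 4 remains, which is the motivation for baselining before the time of interest.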

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors with names containing this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

save( # SAVE TO A cPickle FILE self, sfile = None, # IF NONE FILE=yname+shot. .Data IS APPENDED )

Save array in pickled format. The recommended file extension is .pkl.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

Attempt to read data from MDSplus.

Note

A 2 shot (current and last) history is kept internally and referenced for faster access to working data.

- Arguments:

- shot : int.

- Valid DIII-D 6-digit shot number.

- Key Word Arguments:

- ptdata : bool.

- Map pointnames to older ptdata pointnames and prioritize ptdata over mdsplus (ignoring errors, subnodes, etc.).

- force_data : obj.

- Override the ptdata or mdsplus database in favor of an explicit Data object.

Additional kwargs passed to Data object initialization.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.
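The note above about the two-shot history describes a small cache that avoids repeated reads. A rough sketch of the idea follows. The class `ShotHistory`, the `fake_fetch` stand-in, and the least-recently-used eviction rule are all assumptions made for illustration; the package's internal bookkeeping may differ.

```python
from collections import OrderedDict


class ShotHistory:
    """Keep data for the most recently used shots (two by default).

    Hypothetical illustration; the package's internal cache may differ.
    """

    def __init__(self, fetch, maxshots=2):
        self._fetch = fetch          # callable that does the slow retrieval
        self._maxshots = maxshots
        self._cache = OrderedDict()  # shot number -> data, oldest first

    def get(self, shot):
        if shot in self._cache:
            self._cache.move_to_end(shot)    # mark as most recently used
            return self._cache[shot]
        data = self._fetch(shot)             # slow path, e.g. a database read
        self._cache[shot] = data
        while len(self._cache) > self._maxshots:
            self._cache.popitem(last=False)  # drop the oldest shot
        return data


calls = []


def fake_fetch(shot):
    """Stand-in for a real data read; records each call."""
    calls.append(shot)
    return {"shot": shot}


history = ShotHistory(fake_fetch)
history.get(154551)
history.get(154552)
history.get(154551)  # served from the history, no new fetch
```

Switching back and forth between the current and previous shot therefore costs only one retrieval per shot.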

SENSOR DOCUMENTATION

Interpolate model output to sensor surface and set_data using a time-independent data object.

Currently supports IPEC model brzphi output format (table headers must include r,z,imag(b_r),real(b_r),imag(b_z), and real(b_z)).

- Arguments:

- modeldata : obj.

- A model data object (see model.read).

- Key Word Arguments:

- model_type : str.

- Choose from ‘IPEC’.

- dphi : float.

- Shift toroidal phase (rad).

- Returns:

- bool.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

    shape(
        # RETURN self.y.shape
        self,
        )

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

skip( nskip=0, axis=0 ): skip nskip points along axis

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

skipval( xskip=0., axis=0 ): skip deltax along axis

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

    # SMOOTH DATA
    def smooth(
        # SMOOTH INSTANCE WITH ARBITRARY KERNEL (RESPONSE FUNCTION)
        # SMOOTHING CAN BE DONE USING A SET OF PREDEFINED RESPONSE FUNCTIONS OR
        # USING AN INPUT RESPONSE FUNCTION. SMOOTHING CAN BE DONE ON A GIVEN AXIS
        # FOR DATA WITH MULTIPLE X AXES.
        self,
        xave = None,  # AVERAGING INTERVAL (SEE FAVE) OR ARBITRARY ARGUMENT TO FAVE
        fave = None,  # either a user input response function or a string that selects
                      # from the following predefined response functions and lag windows:
                      #
                      # triang : SYMMETRIC TRIANGULAR RESPONSE: tave = 1/2 TOTAL INTERVAL
                      # back   : BACK AVERAGE: tave = AVERAGING INTERVAL
                      # foward : FORWARD AVERAGE: tave = AVERAGING INTERVAL
                      # center : CENTERED AVERAGE: tave = AVERAGING INTERVAL
                      # rc     : RC: tave = TIMECONSTANT
                      # weiner : WIENER (OPTIMAL) FILTER. THIS IS SIMILAR TO A CENTERED
                      #          AVERAGE EXCEPT THAT THE NOISE LEVEL IS ESTIMATED BASED ON THE
                      #          ENTIRE INTERVAL AND DATA OUTSIDE THE NOISE LEVEL HAVE LESS
                      #          AVERAGING. HERE xave CAN BE A TWO ELEMENT LIST OR TUPLE WITH
                      #          THE SECOND ELEMENT BEING AN INPUT NOISE LEVEL (SIGMA NOT SIGMA**2)
                      #          (OTHERWISE THE NOISE LEVEL IS COMPUTED FROM THE SIGNAL).
                      # median : MEDIAN FILTER
                      # order  : ORDER FILTER, NOTE THAT IN THIS CASE fave = ['order',order] WHERE
                      #          E.G. order=0.1 WILL GIVE THE LOWER 10 % VALUE
                      # normal : NORMAL DISTRIBUTION, HERE xave = [ sigma, cutoff ]
                      #          WHERE K ~ EXP(-X**2/(2*sigma**2)), AND, cutoff = EXTEND KERNEL TO
                      #          X = +/- cutoff*sigma, sigma = 0.8493*FWHM.
                      #          If xave = sigma, cutoff is assumed = 2.5 .
                      # trap   : TRAPEZOID. xave=[tave0,tave1]: tave0 = bottom of trapezoid, tave1=top<tave0
                      # prob   : PROBABILITY DISTRIBUTION, HERE xave = [tave, sigma, cutoff]
                      #          K ~ P( ( X + tave/2 )/sigma ) - P( ( X - tave/2 )/sigma ) AND
                      #          P(Z) = ( int(-inf to Z)(exp(-t**2/2)) )/sqrt(2pi). FOR SMALL sigma THIS IS
                      #          A BOXCAR OF WIDTH = tave. WINGS ARE ADDED AT FINITE sigma.
                      #          cutoff WHEN Z= tave/2 + cutoff*sigma (cutoff=2.5 GIVES K=0.6%)
                      #          If xave = [tave,sigma], cutoff is assumed = 2.5 .
                      # ----------------------------------------------------------------------
                      # IF fave IS A USER DEFINED FUNCTION IT MUST TAKE TWO ARGUMENTS:
                      # fave(xave,dx) WHERE xave IS BY DEFAULT THE AVERAGING INTERVAL, HOWEVER
                      # xave IS ONLY USED IN THE CALL TO fave AND THUS IT CAN BE ANY PYTHON
                      # DATA STRUCTURE. dx IS THE X INTERVAL OF THE DATA WHICH IS PASSED IN AT RUN TIME.
                      # fave SHOULD RETURN A 1-D ARRAY OF THE RESPONSE FUNCTION SAMPLED AT
                      # dx INTERVALS, SYMMETRICALLY CENTERED ON THE DATA POINT.
                      #
                      # ----------------------------------------------------------------------
                      # LAG WINDOWS:
                      # IN ADDITION, THE SMOOTH FUNCTION IS USED TO IMPLEMENT LAG WINDOWS FOR THE
                      # ESTIMATION OF POWER SPECTRA. THE FOLLOWING LAG WINDOWS ARE SUPPORTED USING
                      # THE fave ARGUMENT:
                      # Bartlett, Blackman, Hamming, Hanning, Parzen, and Square
                      # NOTE THAT THESE ARE UNNORMALIZED KERNELS !!!!!
                      # ----------------------------------------------------------------------
        axis = 0,             # X-AXIS FOR SMOOTHING IN THE CASE OF MULTIPLE X AXES
        correlation = False,  # IF TRUE USE CORRELATION RATHER THAN CONVOLUTION, THIS REVERSES
                              # ASYMMETRICAL KERNELS
        use_fft = True,       # SMOOTH BY CONVOLUTION OF FFTs OF SIGNAL AND KERNEL RATHER THAN DIRECT
                              # CONVOLUTION. THIS IS OFTEN A FACTOR OF TEN FASTER.
                              # FOR 1D DATA WITH fave in ['triang','back','forward','center','rc','normal'],
                              # AN ANALYTIC FORM FOR THE FFT OF THE SMOOTHING KERNEL IS SUPPLIED IN data_smooth_fft.py,
                              # OR USER SUPPLIED ANALYTICAL FORMULA FOR THE FFT OF THE KERNEL SUPPLIED IN
                              # user_smooth_fft.py (SEE data_smooth_fft.py). OTHERWISE A DISCRETE KERNEL FFT IS USED.
        quiet = True,
        )
        # RETURNS: NEW SMOOTHED Data INSTANCE

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.
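The smooth documentation above states that a user-defined fave must accept (xave, dx) and return a 1-D response function sampled at dx intervals and centered on the data point, and that smoothing may be done by direct or FFT convolution. The sketch below illustrates both points with NumPy alone. The function names are hypothetical, the triangular shape and unit-sum normalization are choices made for this example, and nothing here calls the data module itself.

```python
import numpy as np


def triangle_response(xave, dx):
    """User-style response function with the fave(xave, dx) signature.

    Returns a symmetric triangular kernel sampled every dx that extends
    about xave to either side of the data point, normalized to unit sum.
    """
    nhalf = max(int(round(xave / dx)), 1)
    offsets = np.arange(-nhalf, nhalf + 1) * dx
    kernel = 1.0 - np.abs(offsets) / (nhalf * dx + dx)
    return kernel / kernel.sum()


def smooth_direct(y, kernel):
    """Direct convolution, keeping the original signal length."""
    return np.convolve(y, kernel, mode="same")


def smooth_fft(y, kernel):
    """Same result via FFTs, zero-padded to avoid wrap-around."""
    n = len(y) + len(kernel) - 1
    full = np.fft.irfft(np.fft.rfft(y, n) * np.fft.rfft(kernel, n), n)
    start = (len(kernel) - 1) // 2
    return full[start:start + len(y)]


# Noisy test signal on a uniform grid.
dx = 0.1
t = np.arange(0.0, 10.0, dx)
rng = np.random.default_rng(0)
noisy = np.sin(t) + 0.2 * rng.standard_normal(t.size)

kernel = triangle_response(0.5, dx)
smoothed = smooth_direct(noisy, kernel)
```

The two routes agree to rounding error; the FFT route only pays off for long signals or wide kernels. For an asymmetric kernel, correlation instead of convolution would amount to reversing the kernel first.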

SENSOR DOCUMENTATION

    sort(
        # SORT DATA ON ALL X AXES IN PLACE (self REPLACED BY SORTED VERSION)
        self,
        )
        # self.t_domains IS DELETED IF ANY SORTING IS ACTUALLY DONE

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

    spline(
        # B-SPLINE OF POLYNOMIAL SEGMENTS WITH VARIABLE KNOTS OR FIXED KNOTS AND CONSTRAINTS
        # IF constraint IS NOT None ONLY 1-D IS ALLOWED, OTHERWISE 1-D AND 2-D.
        # AN INTERPOLATING SPLINE PASSING THROUGH ALL THE DATA IS PRODUCED BY DEFAULT.
        self,
        knots = None,       # KNOT LOCATIONS. IF MORE THAN 1-D A LIST OF KNOT LOCATIONS
                            # IF None THEN AUTOKNOTING WITH NO CONSTRAINT (SEE s),
                            # OTHERWISE A SEQUENCE OF EITHER BSPLINE OR ORDINARY TYPE KNOT
                            # LOCATIONS (SEE knot_type). FOR 2-D IF ONE OF THE KNOT SETS IN THE
                            # LIST IS None KNOTS ARE AUTOMATICALLY CHOSEN AS FOR AN INTERPOLATING
                            # SPLINE ALONG THAT AXIS.
        s = 0.,             # SMOOTHING VALUE = CHISQ (INCLUDING ERRORS IF yerror IS NOT None) OF THE
                            # SPLINE FIT TO THE DATA USED IN AUTOKNOTING (NUMBER AND LOCATION OF
                            # KNOTS BOTH DETERMINED). IGNORED IF knots IS NOT None. TO PRODUCE
                            # A SPLINE INTERPOLATION (I.E. PASSING THROUGH EACH DATA POINT) SET
                            # s=0, knots=None, AND constraint=None (DEFAULT)
        constraint = None,  # SPLINE CONSTRAINTS. REQUIRES 1-D AND FIXED KNOTS.
                            # [ [x0, x1, x2,...], [v0, v1, v2,...], [ k0, k1, k2,...] ]
                            # WHERE k = type + 4*nderiv, AND nderiv = ORDER OF DERIVATIVE AT CONSTRAINT =
                            # -1 = IGNORE, 0 = VALUE, 1 = FIRST, 2= SECOND, ... , AND
                            # type = 0: nderiv(y) at x <= v
                            # type = 1: nderiv(y) at x >= v
                            # type = 2: nderiv(y) at x == v
                            # type = 3: nderiv(y) at x == nderiv(y) at v
        order = 3,          # POLYNOMIAL ORDER OF SPLINE SEGMENTS, = 3 FOR CUBIC SPLINES.
                            # FOR MORE THAN 1-D A LIST MAY BE ENTERED OTHERWISE ASSUME
                            # ALL DIMENSIONS USE THE SAME ORDER.
                            # AUTO KNOT MUST HAVE order<=5.
                            # FIXED KNOT 1-D WITH CONSTRAINT order <=19.
        knot_type = 's',    # WHEN ENTERING KNOTS, SIMPLE KNOTS USE 's'. IN THIS CASE EXTRA KNOTS
                            # ARE ADDED TO THE KNOT LIST TO COMPLY WITH B-SPLINE REQUIREMENTS. IF
                            # KNOT_TYPE = 'b' NO EXTRA KNOTS ARE ADDED AND KNOTS MUST COMPLY WITH B-SPLINE
                            # REQUIREMENTS. knot_type CAN BE A LIST FOR MORE THAN 1-D SPLINES
        quiet = 0,          # PRINT RESULT SUMMARY, =1 DON'T PRINT SUMMARY
        )
        # Returns a new Data instance, z, which is a copy of self except z.y = values of spline at self.x
        # Additional attributes of z:
        # z.spline_tck = [ knots, spline_coefficients, spline_order ]
        # z.spline_chisq = chisquared for the fit
        # z.spline_nu = number of fitting parameters
        # z.__call__ (i.e. instance can be called as a function) = z(x,ider) or z(x,y,iderx,idery)
        # where x=xarray, y=yarray,ider=derivative order: 0=value,1=first,etc. ider can be
        # omitted. For 1-D data z(x,-1,b) gives the integral of z from x to b. Also for 1-D
        # data z() gives the roots. For 2-D when x and y are given separately a 2-D array of
        # values are returned taking axis as x,y, in this case x and y MUST be ordered. If
        # instead z.nx=2 and x is a Nx2 array, [ [x0,y0], [x1,y1],... ] and y is omitted then
        # N values of z are returned and the points do not need to be ordered.

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.
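The spline documentation above packs each constraint into a single integer, k = type + 4*nderiv. The short sketch below shows that arithmetic and how a constraint list in the documented [xs, vs, ks] layout could be assembled. The helper names are hypothetical, and the example only builds the list; it does not call spline and makes no claim about how the routine consumes it.

```python
def encode_constraint(ctype, nderiv):
    """Pack a constraint type (0-3) and derivative order into one code."""
    if ctype not in (0, 1, 2, 3):
        raise ValueError("type must be 0, 1, 2, or 3")
    return ctype + 4 * nderiv


def decode_constraint(k):
    """Invert encode_constraint: return (type, nderiv)."""
    nderiv, ctype = divmod(k, 4)
    return ctype, nderiv


# Pin the value at x=0 to 1.0 (type 2, nderiv 0) and require a
# non-negative first derivative at x=2 (type 1, nderiv 1).
xs = [0.0, 2.0]
vs = [1.0, 0.0]
ks = [encode_constraint(2, 0), encode_constraint(1, 1)]
constraint = [xs, vs, ks]
```

Because Python's divmod floors toward negative infinity, the decoding also recovers nderiv = -1 (ignore) correctly.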

SENSOR DOCUMENTATION

    timing_domains(
        # COMPUTE TIMING DOMAINS (REGIONS WITH SAME POINT SPACING) FOR X INDEX = AXIS
        self,
        axis = 0,  # AXIS ALONG WHICH TO GET TIMING DOMAINS
        )
        # RETURNED VALUE: None, VALUE SET IN self.t_domains

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.
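A timing domain, as described above, is a region of the x axis with the same point spacing. One way to find such regions on a plain array is sketched below. The function name, the tolerance argument, and the (start, stop, dx) return format are assumptions for illustration; the format actually stored in self.t_domains is not documented here.

```python
import numpy as np


def find_timing_domains(x, rtol=1e-6):
    """Return (start, stop, dx) for each run of equally spaced points.

    start and stop are inclusive indices into x. Illustrative only.
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    domains = []
    start = 0
    for i in range(1, len(dx)):
        # A new domain begins where the spacing departs from the current one.
        if not np.isclose(dx[i], dx[start], rtol=rtol, atol=0.0):
            domains.append((start, i, float(dx[start])))
            start = i
    if len(dx):
        domains.append((start, len(dx), float(dx[start])))
    return domains


# Four points at unit spacing followed by points at spacing 2.
print(find_timing_domains([0, 1, 2, 3, 5, 7, 9]))
```

Adjacent domains share their boundary point, since that sample closes one spacing and opens the next.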

SENSOR DOCUMENTATION

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.

SENSOR DOCUMENTATION

    tspline(
        # SPLINE WITH TENSION. CURRENTLY ONLY 1-D BUT 2-D COULD BE ADDED IF NEEDED.
        # METHOD ALLOWS PRODUCING:
        # 1) INTERPOLATING SPLINE AT A GIVEN TENSION VALUE (KNOTS AT ABSCISSA VALUES AND PASSING THROUGH ALL DATA).
        #    ALLOWS SETTING DERIVATIVE VALUES AT END POINTS
        # 2) SMOOTHING SPLINE AT A GIVEN TENSION BASED ON ERROR BARS AND SMOOTHING FACTOR s (KNOTS AT ABSCISSA VALUES)
        # 3) FITTING SPLINE TO DATA WITH ERROR BARS (KNOTS SPECIFIED). DERIVATIVES AND/OR VALUES AT KNOT END POINTS CAN
        #    BE SPECIFIED; TENSION CAN BE SPECIFIED OR FIT ALONG WITH Y VALUES AT KNOTS.
        self,
        knots = None,   # IF None KNOTS ARE self.x[0], AND => INTERPOLATING OR SMOOTHING SPLINE,
                        # IF NOT None => FITTING SPLINE
        s = 0.0,        # SMOOTHING FACTOR. s >=0.0. s=0.0 => INTERPOLATING SPLINE.
                        # s IS ROUGHLY THE REDUCED CHISQ => A REASONABLE VALUE FOR S = NUMBER OF DATA POINTS
                        # IGNORED FOR FITTING SPLINE.
        eps = None,     # FOR SMOOTHING SPLINE A TOLERANCE ON THE RELATIVE PRECISION TO WHICH S IS TO BE INTERPRETED.
                        # 1.0 >= eps >= 0.0. IF eps IS None, SQRT(2/NUMBER_OF_DATA_POINTS) IS USED.
                        # IGNORED FOR FITTING SPLINE.
        y0 = None,      # FIX Y VALUE AT FIRST KNOT TO y0 FOR FITTING SPLINE, IF None THEN FIT FIRST KNOT Y VALUE
        y1 = None,      # FIX Y VALUE AT LAST KNOT TO y1 FOR FITTING SPLINE, IF None THEN FIT LAST KNOT Y VALUE
        yp0 = None,     # FIX DERIVATIVE OF Y AT FIRST KNOT TO yp0, IF None THEN FLOAT.
        yp1 = None,     # FIX DERIVATIVE OF Y AT LAST KNOT TO yp1, IF None THEN FLOAT.
        tension = 0.0,  # THE TENSION FACTOR. THIS VALUE INDICATES THE DEGREE TO WHICH THE FIRST DERIVATIVE PART OF THE
                        # SMOOTHING FUNCTIONAL IS EMPHASIZED. IF tension IS NEARLY ZERO (E. G. .001) THE RESULTING CURVE IS
                        # APPROXIMATELY A CUBIC SPLINE. IF tension IS LARGE (E. G. 50.) THE RESULTING CURVE IS NEARLY A
                        # POLYGONAL LINE. FOR A FITTING SPLINE IF tension = None, tension IS FIT ALONG WITH THE VALUES AT THE KNOTS.
        quiet = 0,      # 0=PRINT RESULT SUMMARY, =1 DON'T PRINT SUMMARY
        )
        # Returns a new Data instance, z, which is a copy of self except z.y = values of spline at self.x
        # Additional attributes of z:
        # z.tspl_coef = Array( [ x_knots, y_knots, y''_knots ] )
        # z.tspl_tens = tension
        # z.tspl_chisq = chisquared for the fit
        # z.tspl_nu = number of fitting parameters
        # z.__call__ (i.e. instance can be called as a function) = z(x,ider)
        # where x=xarray, y=yarray,ider=derivative order(optional): 0=value,1=first
        # z(x,-1,b) gives the integral of z from x to b(<=x_n).

APPLIED TO ALL SENSORS

- Additional Key Word Arguments:

- search : str.

- Apply to only those sensors whose names contain this string.

- exclude : list.

- Apply to all but these sensors.